The Replication Crisis: Flaws in Mainstream Science

2013 discussion of how systemic biases in science, particularly medicine and psychology, have resulted in a research literature filled with false positives and exaggerated effects, called ‘the Replication Crisis’.

Long-standing problems in standard scientific methodology have exploded as the “Replication Crisis”: the discovery that many results in fields as diverse as psychology, economics, medicine, biology, and sociology are in fact false or quantitatively highly inaccurate. I cover here a handful of the issues and publications on this large, important, and rapidly developing topic up to about 2013, at which point the Replication Crisis became too large a topic to cover more than cursorily.

The crisis is caused by methods & publishing procedures which interpret random noise as important results, far too small datasets, selective analysis by an analyst trying to reach expected/desired results, publication bias, poor implementation of existing best-practices, nontrivial levels of research fraud, software errors, philosophical beliefs among researchers that false positives are acceptable, neglect of known confounding like genetics, and skewed incentives (financial & professional) to publish ‘hot’ results.

Thus, any individual piece of research typically establishes little. Scientific validation comes not from small p-values, but from discovering a regular feature of the world which disinterested third parties can discover with straightforward research done independently on new data with new procedures—replication.

Mainstream science is flawed: seriously mistaken statistics combined with poor incentives have led to masses of misleading research. Nor is this problem exclusive to psychology: economics, certain genetics subfields (principally candidate-gene research), biomedical science, and biology in general are often on shaky ground.

NHST and Systematic Biases

Statistical background on p-value problems: Against null-hypothesis statistical-significance testing

The basic nature of ‘significance’ being usually defined as p < 0.05 means we should expect something like >5% of studies or experiments to be bogus (optimistically), but that only considers “false positives”; reducing “false negatives” requires statistical power (weakened by small samples), and the two combine with the base rate of true underlying effects into a total error rate. 2005 points out that considering the usual p values, the underpowered nature of many studies, the rarity of underlying effects, and a little bias, even large randomized trials may wind up with only an 85% chance of having yielded the truth. One survey of reported p-values in medicine yielded a lower bound of false positives of 17%.
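The way false positives, power, base rate, and bias combine can be sketched numerically. The following is a minimal illustration rather than any cited paper's exact method: the function name, the `bias` parameter (modeled here as the fraction of would-be negative results that get reported as positive anyway), and the second scenario's numbers are all assumptions made for the example.

```python
def positive_predictive_value(alpha, power, prior, bias=0.0):
    """Fraction of 'significant' findings that reflect a real effect.

    alpha: false-positive rate of the test (e.g. 0.05)
    power: probability of detecting a real effect
    prior: fraction of tested hypotheses that are actually true (base rate)
    bias:  assumed fraction of would-be negative results that end up
           reported as positive anyway (selective analysis, p-hacking)
    """
    true_pos = prior * (power + bias * (1 - power))
    false_pos = (1 - prior) * (alpha + bias * (1 - alpha))
    return true_pos / (true_pos + false_pos)


# Hypothetical scenarios: (alpha, power, prior, bias)
scenarios = {
    "well-powered trial, 1:1 prior odds, 10% bias": (0.05, 0.80, 0.50, 0.10),
    "underpowered study, 1:10 prior odds, 30% bias": (0.05, 0.20, 1 / 11, 0.30),
}
for label, args in scenarios.items():
    print(f"{label}: PPV = {positive_predictive_value(*args):.2f}")
```

Under these assumptions the first scenario gives a PPV of about 0.85, in line with the figure quoted above for large randomized trials; the second shows how quickly the chance of a true finding collapses once power and the base rate both drop.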

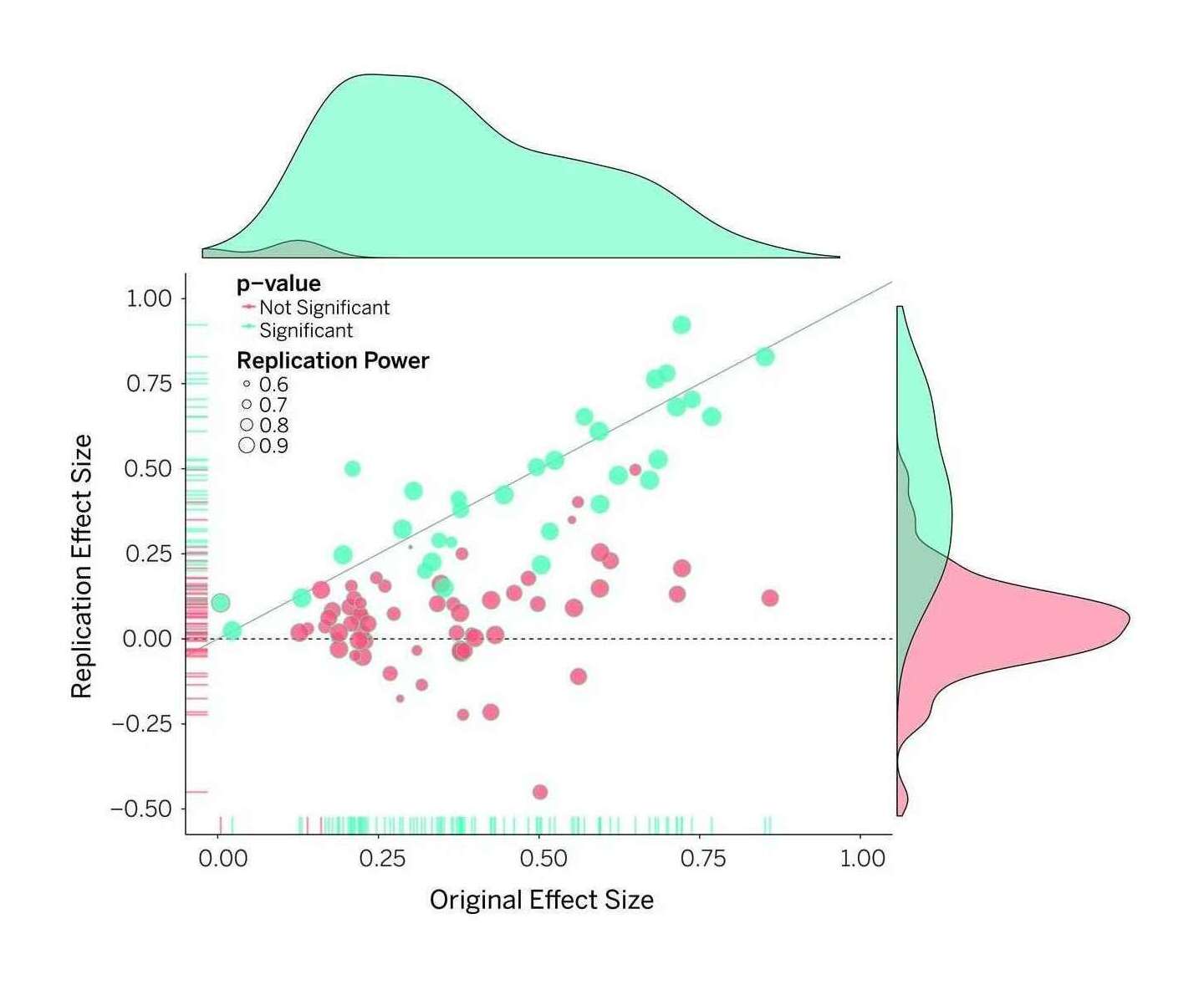

Open Science 2015: “Figure 1: Original study effect size versus replication effect size (correlation coefficients). Diagonal line represents replication effect size equal to original effect size. Dotted line represents replication effect size of 0. Points below the dotted line were effects in the opposite direction of the original. Density plots are separated by statistically-significant (blue) and non-statistically-significant (red) effects.”

Yet, there are too many positive results1 (psychiatry, neurobiology, biomedicine, biology, ecology & evolution, psychology, economics’ top journals, sociology, gene-disease correlations) given effect sizes (and positive results correlate with per capita publishing rates in US states & vary by period & country; apparently chance is kind to scientists who must publish a lot and recently!); then there come the inadvertent errors which might cause retraction, which is rare, but the true retraction rate may be 0.1–1% (“How many scientific papers should be retracted?”), is increasing, & seems to positively correlate with journal prestige metrics (modulo the confounding factor that famous papers/journals get more scrutiny), not that anyone pays any attention to such things; then there are basic statistical errors in >11% of papers (based on the high-quality papers in Nature and the British Medical Journal; “Incongruence between test statistics and P values in medical papers”, García-2004) or 50% in neuroscience.

And only then can we get into replicating at all. See for example The Atlantic article “Lies, Damned Lies, and Medical Science” on John P. A. Ioannidis’s research showing 41% of the most cited medical research failed to be replicated, that is, were wrong. For details, you can see Ioannidis’s “Why Most Published Research Findings Are False”2, or Begley’s failed attempts to replicate 47 of 53 articles in top cancer journals (leading to Booth’s “Begley’s Six Rules”; see also the Nature Biotechnology editorial & note that full details have not been published because the researchers of the original studies demanded secrecy from Begley’s team), or 2011’s “Health Care Myth Busters: Is There a High Degree of Scientific Certainty in Modern Medicine?” who write ‘We could accurately say, “Half of what physicians do is wrong,” or “Less than 20% of what physicians do has solid research to support it.”’ Nutritional epidemiology is something of a fish in a barrel; after Ioannidis, is anyone surprised that when 2011 followed up on 52 correlations tested in 12 RCTs, 0⁄52 replicated and the RCTs found the opposite of 5?

Attempts to use animal models to infer anything about humans suffer from all the methodological problems previously mentioned, and add in interesting new forms of error such as mice simply being irrelevant to humans, leading to cases like nearly 150 sepsis clinical trials all failing, because the drugs worked in mice but humans have a completely different set of genetic reactions to inflammation.

‘Hot’ fields tend to be new fields, which brings problems of its own, see “Large-Scale Assessment of the Effect of Popularity on the Reliability of Research” & discussion. (Failure to replicate in larger studies seems to be a hallmark of biological/medical research. Ioannidis performs the same trick with biomarkers, finding less than half of the most-cited biomarkers were even statistically-significant in the larger studies. 12 of the more prominent SNP-IQ correlations failed to replicate on larger data.) As we know now, almost the entire candidate-gene literature, most things reported from 2000–2010 before large-scale GWASes started to be done (and completely failing to find the candidate-genes), is nothing but false positives! The replication rates of candidate-genes for things like intelligence, personality, gene-environment interactions, psychiatric disorders (the whole schmeer) are literally ~0%. On the plus side, the parlous state of affairs means that there are some cheap heuristics for detecting unreliable papers: simply asking for data & being refused/ignored correlates strongly with the original paper having errors in its statistics.

This epidemic of false positives is apparently deliberately and knowingly accepted by epidemiology; 2008 “Everything is Dangerous” remarks that 80–90% of epidemiology’s claims do not replicate (eg. the NIH ran 20 randomized-controlled-trials of claims, and only 1 replicated) and that omitting ‘multiple comparisons’ correction (either Bonferroni or Benjamini-Hochberg) is taught: “Rothman (1990) says no correction for multiple testing is necessary and Vandenbroucke, PLoS Med (2008) agrees” (see also 1998 who also explicitly understands that no correction increases type 1 errors and reduces type 2 errors). Multiple-comparison correction is necessary because its absence does, in fact, result in the overstatement of medical benefit (1985, et al 1987, 1987). The average effect size for findings confirmed meta-analytically in psychology/education is d = 0.53 (well below several effect sizes from n-back/IQ studies); when moving from laboratory to non-laboratory settings, meta-analyses find that laboratory and field findings correlate ~0.7, but for social psychology the replication correlation falls to ~0.5 with >14% of findings actually turning out to be the opposite (see et al 1999 and 2012; for exaggeration due to non-blinding or poor randomization, et al 2008). (Meta-analyses also give us a starting point for understanding how unusual medium or large effects sizes are4.) Psychology does have many challenges, but practitioners also handicap themselves; an older overview is the entertaining “What’s Wrong With Psychology, Anyway?”, which mentions the obvious point that statistics & experimental design are flexible enough to reach significance as desired.
In an interesting example of how methodological reforms are no panacea in the presence of continued perverse incentives, an earlier methodological improvement in psychology (reporting multiple experiments in a single publication as a check against results not being generalizable) has merely demonstrated the widespread p-value hacking or manipulation or publication bias when one notes that given the low statistical power of each experiment, even if the underlying phenomena were real it would still be wildly improbable that all n experiments in a paper would turn up statistically-significant results, since power is usually extremely low in experiments (eg. in neuroscience, “between 20–30%”). These problems are pervasive enough that I believe they entirely explain any “decline effects”5.
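The arithmetic behind the "too many significant results in one paper" argument is short enough to sketch. This assumes, for illustration only, that every experiment in a paper tests a real effect, that the experiments are independent, and that each has the same power; the function name is hypothetical.

```python
def prob_all_significant(power, n_experiments):
    """Chance that n independent experiments on a real effect are ALL significant."""
    return power ** n_experiments


# Power values spanning the low range mentioned above up to the conventional 0.8
for power in (0.2, 0.3, 0.5, 0.8):
    for n in (3, 5):
        p = prob_all_significant(power, n)
        print(f"power={power:.1f}, experiments={n}: P(all significant) = {p:.4f}")
```

At 30% power, five out of five significant results should occur in roughly 1 paper in 400, so a literature in which such papers are routine points to selection of some kind rather than to consistently real effects.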

The failures to replicate “statistically-significant” results have led one blogger to caustically remark (see also “Parapsychology: the control group for science”, “Using degrees of freedom to change the past for fun and profit”, “The Control Group is Out Of Control”):

Parapsychology, the control group for science, would seem to be a thriving field with “statistically-significant” results aplenty…Parapsychologists are constantly protesting that they are playing by all the standard scientific rules, and yet their results are being ignored—that they are unfairly being held to higher standards than everyone else. I’m willing to believe that. It just means that the standard statistical methods of science are so weak and flawed as to permit a field of study to sustain itself in the complete absence of any subject matter. With two-thirds of medical studies in prestigious journals failing to replicate, getting rid of the entire actual subject matter would shrink the field by only 33%.

…Let me draw the moral [about publication bias]. Even if the community of inquiry is both too clueless to make any contact with reality and too honest to nudge borderline findings into significance, so long as they can keep coming up with new phenomena to look for, the mechanism of the file-drawer problem alone will guarantee a steady stream of new results. There is, so far as I know, no Journal of Evidence-Based Haruspicy filled, issue after issue, with methodologically-faultless papers reporting the ability of sheep’s livers to predict the winners of sumo championships, the outcome of speed dates, or real estate trends in selected suburbs of Chicago. But the difficulty can only be that the evidence-based haruspices aren’t trying hard enough, and some friendly rivalry with the plastromancers is called for. It’s true that none of these findings will last forever, but this constant overturning of old ideas by new discoveries is just part of what makes this such a dynamic time in the field of haruspicy. Many scholars will even tell you that their favorite part of being a haruspex is the frequency with which a new sacrifice over-turns everything they thought they knew about reading the future from a sheep’s liver! We are very excited about the renewed interest on the part of policy-makers in the recommendations of the mantic arts…

And this is when there is enough information to replicate; open access to any data for a paper is rare (economics: <10%); the economics journal Journal of Money, Credit and Banking, which required that researchers provide the data & software needed to replicate their statistical analyses, discovered that <10% of the submitted materials were adequate for repeating the paper (see “Lessons from the JMCB Archive”). In one cute economics example, replication failed because the dataset had been heavily edited to make participants look better (for more economics-specific critique, see 2013). Availability of data, often low, decreases with time, and many studies never get published regardless of whether publication is legally mandated.

Transcription errors in papers seem to be common (possibly due to constantly changing analyses & p-hacking?), and as software and large datasets become more integral to research, both the need for replicability and the difficulty of achieving it will grow, because even mature commercial software libraries can disagree majorly on their computed results for the same mathematical specification (see also et al 2009). And spreadsheets are especially bad, with error rates in the 88% range (“What we know about spreadsheet errors”, 1998); spreadsheets are used in all areas of science, including biology and medicine (see “Error! What biomedical computing can learn from its mistakes”; famous examples of coding errors include Donohue-Levitt & Reinhart-Rogoff), not to mention regular business (eg. the London Whale).

Psychology is far from being perfect either; look at the examples in The New Yorker’s “The Truth Wears Off” article. Computer scientist Peter Norvig has written a must-read essay on interpreting statistics, “Warning Signs in Experimental Design and Interpretation”; a number of warning signs apply to many psychological studies. There may be incentive problems: a transplant researcher discovered the only way to publish in Nature his inability to replicate his earlier Nature paper was to officially retract it; another interesting example is when, after Daryl Bem got a paper published in the top journal JPSP demonstrating precognition, the journal refused to publish any replications (failed or successful) because… “‘We don’t want to be the Journal of Bem Replication’, he says, pointing out that other high-profile journals have similar policies of publishing only the best original research.” (Quoted in New Scientist) One doesn’t need to be a genius to understand why psychologist Andrew D. Wilson might snarkily remark “…think about the message JPSP is sending to authors. That message is ‘we will publish your crazy story if it’s new, but not your sensible story if it’s merely a replication’.” (You get what you pay for.) In one large test of the most famous psychology results, 10 of 13 (77%) replicated. The replication rate is under 1⁄3 in one area of psychology touching on genetics. This despite the simple point that replications reduce the risk of publication bias, and increase statistical power, so that a replicated result is more likely to be true. And the small samples of n-back studies and nootropic chemicals are especially problematic. Quoting from Nick Bostrom & Anders Sandberg’s 2006 “Converging Cognitive Enhancements”:

The reliability of research is also an issue. Many of the cognition-enhancing interventions show small effect sizes, which may necessitate very large epidemiological studies possibly exposing large groups to unforeseen risks.

Particularly troubling is the slowdown in drug discovery & medical technology during the 2000s, even as genetics in particular was expected to produce earth-shaking new treatments. One biotech venture capitalist writes:

The company spent $5M or so trying to validate a platform that didn’t exist. When they tried to directly repeat the academic founder’s data, it never worked. Upon re-examination of the lab notebooks, it was clear the founder’s lab had at the very least massaged the data and shaped it to fit their hypothesis. Essentially, they systematically ignored every piece of negative data. Sadly this “failure to repeat” happens more often than we’d like to believe. It has happened to us at Atlas [Venture] several times in the past decade…The unspoken rule is that at least 50% of the studies published even in top tier academic journals—Science, Nature, Cell, PNAS, etc..—can’t be repeated with the same conclusions by an industrial lab. In particular, key animal models often don’t reproduce. This 50% failure rate isn’t a data free assertion: it’s backed up by dozens of experienced R&D professionals who’ve participated in the (re)testing of academic findings. This is a huge problem for translational research and one that won’t go away until we address it head on.

Half the respondents to a 2012 survey at one cancer research center reported 1 or more incidents where they could not reproduce published research; two-thirds of those were not “ever able to explain or resolve their discrepant findings”, half had trouble publishing results contradicting previous publications, and two-thirds failed to publish contradictory results. An internal Bayer survey of 67 projects (commentary) found that “only in ~20–25% of the projects were the relevant published data completely in line with our in-house findings”, and as far as assessing the projects went:

…despite the low numbers, there was no apparent difference between the different research fields. Surprisingly, even publications in prestigious journals or from several independent groups did not ensure reproducibility. Indeed, our analysis revealed that the reproducibility of published data did not significantly correlate with journal impact factors, the number of publications on the respective target or the number of independent groups that authored the publications. Our findings are mirrored by ‘gut feelings’ expressed in personal communications with scientists from academia or other companies, as well as published observations. [apropos of above] An unspoken rule among early-stage venture capital firms that “at least 50% of published studies, even those in top-tier academic journals, can’t be repeated with the same conclusions by an industrial lab” has been recently reported (see Further information) and discussed 4.

Physics has relatively small sins; “Assessing uncertainty in physical constants” (1985); Hanson’s summary:

Looking at 306 estimates for particle properties, 7% were outside of a 98% confidence interval (where only 2% should be). In seven other cases, each with 14 to 40 estimates, the fraction outside the 98% confidence interval ranged from 7% to 57%, with a median of 14%.

Nor is peer review reliable or robust against even low levels of collusion. Scientists who win the Nobel Prize find their other work suddenly being heavily cited, suggesting either that the community badly failed in recognizing the work’s true value or that they are now sucking up & attempting to look better by association. (A mathematician once told me that often, to boost a paper’s acceptance chance, they would add citations to papers by the journal’s editors, a practice that will surprise none familiar with Goodhart’s law and the use of citations in tenure & grants.)

The former editor Richard Smith amusingly recounts his doubts about the merits of peer review as practiced, and physicist Michael Nielsen points out that peer review is historically rare (just one of Einstein’s 300 papers was peer reviewed; the famous Nature did not institute peer review until 1967), has been poorly studied & not shown to be effective, is nationally biased, erroneously rejects many historic discoveries (one study lists “34 Nobel Laureates whose awarded work was rejected by peer review”; 1990 lists others), and catches only a small fraction of errors. And questionable choices or fraud? Forget about it:

A pooled weighted average of 1.97% (n = 7, 95%CI: 0.86–4.45) of scientists admitted to have fabricated, falsified or modified data or results at least once—a serious form of misconduct by any standard—and up to 33.7% admitted other questionable research practices. In surveys asking about the behavior of colleagues, admission rates were 14.12% (n = 12, 95% CI: 9.91–19.72) for falsification, and up to 72% for other questionable research practices…When these factors were controlled for, misconduct was reported more frequently by medical/pharmacological researchers than others.

And psychologists:

We surveyed over 2,000 psychologists about their involvement in questionable research practices, using an anonymous elicitation format supplemented by incentives for honest reporting. The impact of incentives on admission rates was positive, and greater for practices that respondents judge to be less defensible. Using three different estimation methods, we find that the proportion of respondents that have engaged in these practices is surprisingly high relative to respondents’ own estimates of these proportions. Some questionable practices may constitute the prevailing research norm.

In short, the secret sauce of science is not ‘peer review’. It is replication!

Systemic Error Doesn’t Go Away

Far better an approximate answer to the right question, which is often vague, than an exact answer to the wrong question, which can always be made precise.

Why isn’t the solution as simple as eliminating datamining by methods like larger n or pre-registered analyses? Because once we have eliminated the random error in our analysis, we are still left with a (potentially arbitrarily large) systematic error, leaving us with a large total error.

None of these systematic problems should be considered minor or methodological quibbling or foolish idealism: they are systematic biases and as such, they force an upper bound on how accurate a corpus of studies can be even if there were thousands upon thousands of studies, because the total error in the results is made up of random error and systematic error, but while random error shrinks as more studies are done, systematic error remains the same.

A thousand biased studies merely result in an extremely precise estimate of the wrong number.
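A small simulation makes the point concrete. This is a sketch under stated assumptions: each measurement is modeled as the true value plus a fixed shared bias plus independent Gaussian noise, and all the numbers (`TRUE_VALUE`, `BIAS`, `NOISE_SD`) are hypothetical. The expected error of the average then follows the standard decomposition of mean squared error into squared bias plus variance.

```python
import math
import random


def rmse_of_mean(n, bias, noise_sd):
    """Root-mean-square error of an n-measurement average: sqrt(bias^2 + noise_sd^2 / n)."""
    return math.sqrt(bias ** 2 + noise_sd ** 2 / n)


def simulate_mean(n, true_value, bias, noise_sd, rng):
    """Average n measurements that all share the same systematic bias."""
    total = sum(true_value + bias + rng.gauss(0, noise_sd) for _ in range(n))
    return total / n


TRUE_VALUE, BIAS, NOISE_SD = 10.0, 0.5, 3.0  # hypothetical values
rng = random.Random(0)  # fixed seed so the run is repeatable

for n in (10, 1_000, 100_000):
    estimate = simulate_mean(n, TRUE_VALUE, BIAS, NOISE_SD, rng)
    print(
        f"n={n:>7}: estimate={estimate:.3f}, "
        f"random part={NOISE_SD / math.sqrt(n):.4f}, "
        f"expected total error={rmse_of_mean(n, BIAS, NOISE_SD):.4f}"
    )
```

As n grows the random part shrinks toward zero, but the expected total error levels off at the bias: the average converges tightly on `TRUE_VALUE + BIAS`, not on `TRUE_VALUE`.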

This is a point appreciated by statisticians and experimental physicists, but it doesn’t seem to be frequently discussed. Andrew Gelman has a fun demonstration of selection bias involving candy, or from pg812–1020 of Chapter 8 “Sufficiency, Ancillarity, And All That” of Probability Theory: The Logic of Science by E.T. Jaynes:

The classical example showing the error of this kind of reasoning is the fable about the height of the Emperor of China. Supposing that each person in China surely knows the height of the Emperor to an accuracy of at least ±1 meter, if there are N = 1,000,000,000 inhabitants, then it seems that we could determine his height to an accuracy at least as good as

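$$\sqrt{\frac{1}{1{,}000{,}000{,}000}}\ \text{m} = 3 \times 10^{-5}\ \text{m} = 0.03\ \text{mm} \qquad \text{(8-49)}$$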

merely by asking each person’s opinion and averaging the results.

The absurdity of the conclusion tells us rather forcefully that the √N rule is not always valid, even when the separate data values are causally independent; it requires them to be logically independent. In this case, we know that the vast majority of the inhabitants of China have never seen the Emperor; yet they have been discussing the Emperor among themselves and some kind of mental image of him has evolved as folklore. Then knowledge of the answer given by one does tell us something about the answer likely to be given by another, so they are not logically independent. Indeed, folklore has almost surely generated a systematic error, which survives the averaging; thus the above estimate would tell us something about the folklore, but almost nothing about the Emperor.

We could put it roughly as follows:

$$\text{error in estimate} = S \pm \frac{R}{\sqrt{N}} \qquad \text{(8-50)}$$

where S is the common systematic error in each datum, R is the RMS ‘random’ error in the individual data values. Uninformed opinions, even though they may agree well among themselves, are nearly worthless as evidence. Therefore sound scientific inference demands that, when this is a possibility, we use a form of probability theory (ie. a probabilistic model) which is sophisticated enough to detect this situation and make allowances for it.

As a start on this, equation (8-50) gives us a crude but useful rule of thumb; it shows that, unless we know that the systematic error is less than about 1⁄3 of the random error, we cannot be sure that the average of a million data values is any more accurate or reliable than the average of ten6. As Henri Poincaré put it: “The physicist is persuaded that one good measurement is worth many bad ones.” This has been well recognized by experimental physicists for generations; but warnings about it are conspicuously missing in the “soft” sciences whose practitioners are educated from those textbooks.

Or pg1019–1020 Chapter 10 “Physics of ‘Random Experiments’”:

…Nevertheless, the existence of such a strong connection is clearly only an ideal limiting case unlikely to be realized in any real application. For this reason, the law of large numbers and limit theorems of probability theory can be grossly misleading to a scientist or engineer who naively supposes them to be experimental facts, and tries to interpret them literally in his problems. Here are two simple examples:

Suppose there is some random experiment in which you assign a probability p for some particular outcome A. It is important to estimate accurately the fraction f of times A will be true in the next million trials. If you try to use the laws of large numbers, it will tell you various things about f; for example, that it is quite likely to differ from p by less than a tenth of one percent, and enormously unlikely to differ from p by more than one percent. But now, imagine that in the first hundred trials, the observed frequency of A turned out to be entirely different from p. Would this lead you to suspect that something was wrong, and revise your probability assignment for the 101’st trial? If it would, then your state of knowledge is different from that required for the validity of the law of large numbers. You are not sure of the independence of different trials, and/or you are not sure of the correctness of the numerical value of p. Your prediction of f for a million trials is probably no more reliable than for a hundred.

The common sense of a good experimental scientist tells him the same thing without any probability theory. Suppose someone is measuring the velocity of light. After making allowances for the known systematic errors, he could calculate a probability distribution for the various other errors, based on the noise level in his electronics, vibration amplitudes, etc. At this point, a naive application of the law of large numbers might lead him to think that he can add three significant figures to his measurement merely by repeating it a million times and averaging the results. But, of course, what he would actually do is to repeat some unknown systematic error a million times. It is idle to repeat a physical measurement an enormous number of times in the hope that “good statistics” will average out your errors, because we cannot know the full systematic error. This is the old “Emperor of China” fallacy…

Indeed, unless we know that all sources of systematic error—recognized or unrecognized—contribute less than about one-third the total error, we cannot be sure that the average of a million measurements is any more reliable than the average of ten. Our time is much better spent in designing a new experiment which will give a lower probable error per trial. As Poincaré put it, “The physicist is persuaded that one good measurement is worth many bad ones.”7 In other words, the common sense of a scientist tells him that the probabilities he assigns to various errors do not have a strong connection with frequencies, and that methods of inference which presuppose such a connection could be disastrously misleading in his problems.

Schlaifer much earlier made the same point in Probability and Statistics for Business Decisions: an Introduction to Managerial Economics Under Uncertainty, 1959, pg488–489 (see also 2018/Shirani- et al 2018):

31.4.3 Bias and Sample Size

In §31.2.6 we used a hypothetical example to illustrate the implications of the fact that the variance of the mean of a sample in which bias is suspected is

$$\sigma^2(x) = \sigma^2(\beta) + \sigma^2(\varepsilon)$$

so that only the second term decreases as the sample size increases and the total can never be less than the fixed value of the first term. To emphasize the importance of this point by a real example we recall the most famous sampling fiasco in history, the presidential poll conducted by the Literary Digest in 1936. Over 2 million registered voters filled in and returned the straw ballots sent out by the Digest, so that there was less than one chance in 1 billion of a sampling error as large as 2⁄10 of one percentage point8, and yet the poll was actually off by nearly 18 percentage points: it predicted that 54.5 per cent of the popular vote would go to Landon, who in fact received only 36.7 per cent.9 10

Since sampling error cannot account for any appreciable part of the 18-point discrepancy, it is virtually all actual bias. A part of this total bias may be measurement bias due to the fact that not all people voted as they said they would vote; the implications of this possibility were discussed in §31.3. The larger part of the total bias, however, was almost certainly selection bias. The straw ballots were mailed to people whose names were selected from lists of owners of telephones and automobiles and the subpopulation which was effectively sampled was even more restricted than this: it consisted only of those owners of telephones and automobiles who were willing to fill out and return a straw ballot. The true mean of this subpopulation proved to be entirely different from the true mean of the population of all United States citizens who voted in 1936.

It is true that there was no evidence at the time this poll was planned which would have suggested that the bias would be as great as the 18 percentage points actually realized, but experience with previous polls had shown biases which would have led any sensible person to assign to β a distribution with σ(β) equal to at least 1 percentage point. A sample of only 23,760 returned ballots, 1⁄100th the size actually used, would have given a value of σ(ε) of only 1⁄3 percentage point, so that the standard deviation of x would have been

$$\sigma(x) = \sqrt{\sigma^2(\beta) + \sigma^2(\varepsilon)} = \sqrt{1^2 + (1/3)^2} = 1.05$$

percentage points. Using a sample 100 times this large reduced σ(ε) from 1⁄3 point to virtually zero, but it could not affect σ(β) and thus on the most favorable assumption could reduce σ(x) only from 1.05 points to 1 point. To collect and tabulate over 2 million additional ballots when this was the greatest gain that could be hoped for was obviously ridiculous before the fact and not just in the light of hindsight.
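The Literary Digest arithmetic can be checked in a few lines. This is a rough sketch rather than Schlaifer's own calculation: it assumes simple random sampling with the usual binomial standard error, uses the predicted 54.5% share as the proportion, takes the actual sample as exactly 100 × 23,760 ballots, and treats the bias as having a 1-point standard deviation that adds in quadrature to the sampling error. The function names are hypothetical.

```python
import math


def sampling_sd_points(share, n):
    """Binomial standard error of a sample proportion, in percentage points."""
    return 100 * math.sqrt(share * (1 - share) / n)


def total_sd_points(bias_sd, sampling_sd):
    """Combine bias and sampling uncertainty in quadrature."""
    return math.hypot(bias_sd, sampling_sd)


LANDON_SHARE = 0.545     # the Digest's predicted share, from the passage
SMALL_N = 23_760         # the smaller sample considered in the passage
LARGE_N = 100 * SMALL_N  # assumed actual sample: "100 times this large"
BIAS_SD = 1.0            # percentage points, the passage's minimum plausible value

for n in (SMALL_N, LARGE_N):
    s = sampling_sd_points(LANDON_SHARE, n)
    print(f"n={n:>9,}: sampling SD={s:.3f} pts, total SD={total_sd_points(BIAS_SD, s):.3f} pts")

# Two-sided normal tail probability of a pure sampling error of 0.2 points or more
z = 0.2 / sampling_sd_points(LANDON_SHARE, LARGE_N)
tail = math.erfc(z / math.sqrt(2))
print(f"P(sampling error >= 0.2 pts) = {tail:.1e}")
```

Under these assumptions the hundredfold larger sample moves the total standard deviation only from about 1.05 points to about 1.00, and the chance of a pure sampling error of 0.2 points comes out below one in a billion, matching the figures in the passage.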

What’s particularly sad is when people read something like this and decide to rely on anecdotes, personal experiments, and alternative medicine where there are even more systematic errors and no way of reducing random error at all! Science may be the lens that sees its own flaws, but if other epistemologies do not boast such long detailed self-critiques, it’s not because they are flawless… It’s like that old Jamie Zawinski quote: Some people, when faced with the problem of mainstream medicine & epidemiology having serious methodological weaknesses, say “I know, I’ll turn to non-mainstream medicine & epidemiology. After all, if only some medicine is based on real scientific method and outperforms placebos, why bother?” (Now they have two problems.) Or perhaps Isaac Asimov: “John, when people thought the earth was flat, they were wrong. When people thought the earth was spherical, they were wrong. But if you think that thinking the earth is spherical is just as wrong as thinking the earth is flat, then your view is wronger than both of them put together.”

See Also

Why Correlation Usually ≠ Causation: Causal Nets Cause Common Confounding

Questionable Research Findings:

Appendix

Additional Links

A bibliography of additional links to papers/blogs/articles on the Replication Crisis, primarily post-2013 and curated from my newsletter, as a followup to the main article text describing the Replication Crisis.

blogs:

blood sugar/willpower: “An opportunity cost model of subjective effort and task performance”, et al 2013; “A Meta-Analysis of Blood Glucose Effects on Human Decision Making”, 2016; “Is Ego-Depletion a Replicable Effect? A Forensic Meta-Analysis of 165 Ego Depletion Articles”; “Eyes wide shut or eyes wide open?”, Roberts commentary; “Ego depletion may disappear by 2020”, 2019; “A Multisite Preregistered Paradigmatic Test of the Ego-Depletion Effect”, et al 2021

“Romance, Risk, and Replication: Can Consumer Choices and Risk-Taking be Primed by Mating Motives?”, et al 2015

“Do Certain Countries Produce Only Positive Results? A Systematic Review of Controlled Trials”, et al 1998

“Randomized Controlled Trials Commissioned by the Institute of Education Sciences Since 2002: How Many Found Positive Versus Weak or No Effects?”, Coalition for Evidence-Based 2013

“Theory-testing in psychology and physics: a methodological paradox”, 1967

“If correlation doesn’t imply causation, then what does?”, Michael Nielsen

“Teachers and Income: What Did the Project STAR Kindergarten Study Really Find?”

“Deep impact: unintended consequences of journal rank”, et al 2013 (meta-science)

“Publication bias in the social sciences: Unlocking the file drawer”, et al 2014 (Nature; Andrew Gelman)

“On the science and ethics of Ebola treatments”, “Controlled trials: the 1948 watershed”

“Academic urban legends”, 2014

“School Desegregation and Black Achievement: an integrative review”, 1985

stereotype threat: “An Examination of Stereotype Threat Effects on Girls’ Mathematics Performance”, et al 2013; “The influence of gender stereotype threat on mathematics test scores of Dutch high school students: a registered report”, et al 2019; “Stereotype Threat Effects in Settings With Features Likely Versus Unlikely in Operational Test Settings: A Meta-Analysis”, et al 2019

“In half of the [placebo-controlled] studies, the results provide evidence against continued use of the investigated surgical procedures.” (media)

“Deliberate practice: Is that all it takes to become an expert?”, et al 2014

“Whither the Blank Slate? A Report on the Reception of Evolutionary Biological Ideas among Sociological Theorists”, et al 2014 (media)

“Bayesian data analysis”, 2010

“The harm done by tests of significance”, 2004 (how p-values increase traffic fatalities)

“Suppressing Intelligence Research: Hurting Those We Intend to Help”, 2005

“Social Psychology as History”, 1973; “Political Diversity Will Improve Social Psychological Science”, et al 2015; “Implications of ideological bias in social psychology on clinical practice”, et al 2020 (liberal ideological homogeneity in social sciences causing problems)

“Contradicted and Initially Stronger Effects in Highly Cited Clinical Research”, 2005

“Non-replicable publications are cited more than replicable ones”, Serra-2021

“A ‘Sham Procedure’ Leads to Disappointing Multiple Sclerosis News”

“What Went Wrong? Reflections on Science by Observation and The Bell Curve”, 1998 (correlation & causation)

“Effects of remote, retroactive intercessory prayer on outcomes in patients with bloodstream infection: randomized controlled trial”, 2001 (Norvig comments)

“Scholarly Context Not Found: One in Five Articles Suffers from Reference Rot”, et al 2014

“Should Psychological Neuroscience Research Be Funded?” (discussion)

“Interpreting observational studies: why empirical calibration is needed to correct p-values”, et al 2012

“Compliance with Results Reporting at ClinicalTrials.gov”, et al 2015 (incentives matter)

“Estimating the reproducibility of psychological science”, Open Science 2015

“Many Labs 3: Evaluating participant pool quality across the academic semester via replication” (commentary)

“Most Published Research Findings Are False—But a Little Replication Goes a Long Way”, 2007

“Mice Fall Short as Test Subjects for Some of Humans’ Deadly Ills”

“Predictive modeling, data leakage, model evaluation” (a researcher is just a very big neural net model)

“The Economics of Reproducibility in Preclinical Research”, et al 2015

“An Alternative to Null-Hypothesis Significance Tests: Prep”, 2005

“Effect of monthly vitamin D3 supplementation in healthy adults on adverse effects of earthquakes: a randomized controlled trial”, et al 2014 (if only more people could be this imaginative in thinking about how to run RCTs, and less defeatist)

“Does ocean acidification alter fish behavior? Fraud allegations create a sea of doubt”

“How One Man Poisoned a City’s Water Supply (and Saved Millions of Children’s Lives in the Process)” (on John L. Leal & water chlorination)

“Reanalyses of Randomized Clinical Trial Data”, et al 2014

“The Null Ritual: What You Always Wanted to Know About Significance Testing but Were Afraid to Ask”, et al 2004

“The Bayesian Reproducibility Project” (interpreting the psychology reproducibility projects’ results not by uninterpretable p-values but by a direct Bayesian examination of whether the replications support or contradict the originals); “Statistical Methods for Replicability Assessment”, 2019

“The Most Dangerous Equation” (sample size and standard error; examples: coinage, disease rates, small schools, male test scores.)

“When Quality Beats Quantity: Decision Theory, Drug Discovery, and the Reproducibility Crisis”, 2016; “Artificial intelligence in drug discovery: what is realistic, what are illusions? Part 1: Ways to make an impact, and why we are not there yet: Quality is more important than speed and cost in drug discovery”, Bender & Cortés-2021

“Online Controlled Experiments: Introduction, Learnings, and Humbling Statistics”, 2012

“Underreporting in Psychology Experiments: Evidence From a Study Registry”, et al 2015 (previously: et al 2014)

“The Scientific Impact of Positive and Negative Phase 3 Cancer Clinical Trials”, et al 2016

“The Stress Test: Rivalries, intrigue, and fraud in the world of stem-cell research”

“Correlation and Causation in the Study of Personality”, 2012

“Is Bilingualism Really an Advantage?”; “The Bitter Fight Over the Benefits of Bilingualism”; “The Bilingual Advantage: Three Years Later—Where do things stand in this area of research?”

“Is bilingualism associated with enhanced executive functioning in adults? A meta-analytic review”, et al 2018

“No evidence for a bilingual executive function advantage in the ABCD study”, et al 2018

“The importance of ‘gold standard’ studies for consumers of research”

“Seven Pervasive Statistical Flaws in Cognitive Training Interventions”, et al 2016

“Could a neuroscientist understand a microprocessor?”, 2016 (amusing followup to “Can a biologist fix a radio?”, 2002)

“Statistically Controlling for Confounding Constructs Is Harder than You Think”, 2016 (See also 1936/1942/1965.)

“Why It Took Social Science Years to Correct a Simple Error About ‘Psychoticism’”

“Can Results-Free Review Reduce Publication Bias? The Results and Implications of a Pilot Study”, et al 2016 (on result-blind peer review)

“Science Is Not Always ‘Self-Correcting’: Fact-Value Conflation and the Study of Intelligence”, 2015 (scientific endorsement of the Noble Lie)

“How Multiple Imputation Makes a Difference”, 2016 (many political science results biased & driven by treatment of missing data)

“Probing the Improbable: Methodological Challenges for Risks with Low Probabilities and High Stakes”, et al 2008

“Predicting Experimental Results: Who Knows What?”, Della2016; “Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015”, et al 2018; “Predicting replication outcomes in the Many Labs 2 study”, et al 2018; “Laypeople Can Predict Which Social-Science Studies Will Be Replicated Successfully”, et al 2020

“Does Reading a Single Passage of Literary Fiction Really Improve Theory of Mind? An Attempt at Replication”, et al 2016

“We Gave Four Good Pollsters the Same Raw Data. They Had Four Different Results” (random error vs systematic error)

“Close but no Nobel: the scientists who never won; Archives reveal the most-nominated researchers who missed out on a Nobel Prize” (Nobels have considerable measurement error)

“Stereotype (In)Accuracy in Perceptions of Groups and Individuals”, et al 2015

“The Statistical Crisis in Science: The Cult Of P”, 2016 (a readable overview of the problems with p-values/NHST)

“The Young Billionaire Behind the War on Bad Science” (John D. Arnold)

“Reconstruction of a Train Wreck: How Priming Research Went off the Rails”

“Meta-assessment of bias in science”, et al 2017

“Working Memory Training Does Not Improve Performance on Measures of Intelligence or Other Measures of ‘Far Transfer’: Evidence From a Meta-Analytic Review”, Melby- et al 2016

“How readers understand causal and correlational expressions used in news headlines”, et al 2017 (people do not understand the difference between correlation and causation)

“Proceeding From Observed Correlation to Causal Inference: The Use of Natural Experiments”, 2007

“Daryl Bem Proved ESP Is Real—which means science is broken” (Apparently Bem was serious. But one man’s modus ponens is another man’s modus tollens.)

“Does High Self-esteem Cause Better Performance, Interpersonal Success, Happiness, or Healthier Lifestyles?”, et al 2003; “The Man Who Destroyed America’s Ego: How a rebel psychologist challenged one of the 20th century’s biggest—and most dangerous—ideas”; “‘It was quasi-religious’: the great self-esteem con”

“Computational Analysis of Lifespan Experiment Reproducibility”, 2017 (Power analysis of Gompertz curve mortality data: as expected, most animal studies of possible life-extension drugs are severely underpowered, which has the usual implications for replication, exaggerated effect sizes, & publication bias.)

“Discontinuation and Nonpublication of Randomized Clinical Trials Conducted in Children”, et al 2016

“The prior can generally only be understood in the context of the likelihood”, et al 2017 (“They strain at the gnat of the prior who swallow the camel of the likelihood.”)

“On the Reproducibility of Psychological Science”, et al 2016

“Disappointing findings on Conditional Cash Transfers as a tool to break the poverty cycle in the United States” (results inflated by self-reported data; as the US is not Scandinavia, many studies depend on self-report.)

“No Beneficial Effects of Resveratrol on the Metabolic Syndrome: A Randomized Placebo-Controlled Clinical Trial”, et al 2017 (A big resveratrol null: zero effect, even evidence of harm.)

“‘Peer review’ is younger than you think. Does that mean it can go away?”; “Peer Review: The end of an error?”

“Randomized Controlled Trial of the Metropolitan Police Department Body-Worn Camera Program”/“A Big Test of Police Body Cameras Defies Expectations”

“‘Unbelievable’: Heart Stents Fail to Ease Chest Pain”, of Al- et al 2017

“When the music’s over. Does music skill transfer to children’s and young adolescents’ cognitive and academic skills? A Meta-Analysis”, Sala & Gobet 201

“Does Far Transfer Exist? Negative Evidence From Chess, Music, and Working Memory Training”, 2017

“Practice Does Not Make Perfect: No Causal Effect of Music Practice on Music Ability”, et al 2014

“Cognitive and academic benefits of music training with children: A multilevel meta-analysis”, 2020

“The World Might Be Better Off Without College for Everyone: Students don’t seem to be getting much out of higher education”; “Do High School Sports Build or Reveal Character?”, Ransom & Ransom 201

“The Elusive Backfire Effect: Mass Attitudes’ Steadfast Factual Adherence”, 2017 (Big failed replication: n = 10100/k = 52.)

“Randomizing Religion: The Impact of Protestant Evangelism on Economic Outcomes”, et al 2018 (How beneficial is religion to individuals?)

“Evaluation of Evidence of Statistical Support and Corroboration of Subgroup Claims in Randomized Clinical Trials”, et al 2017 (Lies, damn lies, and subgroups.)

“Inventing the randomized double-blind trial: the Nuremberg salt test of 1835”, 2006 (The first double-blind randomized pre-registered clinical trial in history was an 1835 German test of homeopathic salt remedies.)

“Questioning the evidence on hookworm eradication in the American South”, 2017

“Homogenous: The Political Affiliations of Elite Liberal Arts College Faculty”, 2018

“Producing Wrong Data Without Doing Anything Obviously Wrong!”, et al 2009

“Revisiting the Marshmallow Test: A Conceptual Replication Investigating Links Between Early Delay of Gratification and Later Outcomes”, et al 2018 (effect size shrinks considerably in replication and the effect appears moderated largely through the expected individual traits like IQ)

“The Impostor Cell Line That Set Back Breast Cancer Research”

“Split brain: divided perception but undivided consciousness”, et al 2017

“p-Hacking and False Discovery in A/B Testing”, et al 2018

“22 Case Studies Where Phase 2 and Phase 3 Trials had Divergent Results”, FDA 2017 (Small study biases)

“Plan to replicate 50 high-impact cancer papers shrinks to just 18”

“Researcher at the center of an epic fraud remains an enigma to those who exposed him”

“The prehistory of biology preprints: A forgotten experiment from the 1960s”, 2017 (Who killed preprints the first time? The commercial academic publishing cartel did. Copyright is why we can’t have nice things.)

“Replication Failures Highlight Biases in Ecology and Evolution Science”

“The cumulative effect of reporting and citation biases on the apparent efficacy of treatments: the case of depression”, de et al 2018 (Figure 1: the flowchart of bias)

“Evaluating replicability of laboratory experiments in economics”, et al 2016 (“About 40% of economics experiments fail replication survey”)

“Communicating uncertainty in official economic statistics”, 2015 (error from systematic bias > error from random sampling error in major economic statistics)

“Many Analysts, One Data Set: Making Transparent How Variations in Analytic Choices Affect Results”, et al 2018

“First analysis of ‘pre-registered’ studies shows sharp rise in null findings”

“Why do humans reason? Arguments for an argumentative theory”, 2011

“How to play 20 questions with nature and lose: Reflections on 100 years of brain-training research”, et al 2018

The state of ovulation evopsych research: mostly wrong, some right

“Many Labs 2: Investigating Variation in Replicability Across Sample and Setting”, et al 2018 (28 targets, k = 60, n = 7000 each, Registered Reports: 14⁄28 replication rate w/few moderators/country differences & remarkably low heterogeneity); “Generalizability of heterogeneous treatment effect estimates across samples”, et al 2018; “Heterogeneity in direct replications in psychology and its association with effect size”, Olsson-2020

“Many Labs 5: Testing Pre-Data-Collection Peer Review as an Intervention to Increase Replicability”, et al 2020

“Comparing meta-analyses and preregistered multiple-laboratory replication projects”, et al 2019

“A Star Surgeon Left a Trail of Dead Patients—and His Whistleblowers Were Punished” (organizational incentives & science)

“How Facebook and Twitter could be the next disruptive force in clinical trials”

“Five-Year Follow-up of Antibiotic Therapy for Uncomplicated Acute Appendicitis in the APPAC Randomized Clinical Trial”, et al 2018 (media; most appendicitis surgeries unnecessary—the question is never whether running an RCT is ethical, the question is how can not running it possibly be ethical?)

“Notes on a New Philosophy of Empirical Science”, 2011 (the ‘compression as inference’ paradigm of intelligence for application to science; stupid gzip tricks, 2006; stupid zpaq tricks with paqclass)

“The association between adolescent well-being and digital technology use”, 2019 (SCA: brute-forcing the ‘garden of forking paths’ to examine robustness of results—the poor man’s Bayesian model comparison)

“Most Rigorous Large-Scale Educational RCTs are Uninformative: Should We Be Concerned?”, Lortie-2019

“How Replicable Are Links Between Personality Traits and Consequential Life Outcomes? The Life Outcomes of Personality Replication Project”, 2019 (individual-differences research continues to replicate well)

“Beware the pitfalls of short-term program effects: They often fade”

“Behavior Genetic Research Methods: Testing Quasi-Causal Hypotheses Using Multivariate Twin Data”, 2014

“How genome-wide association studies (GWAS) made traditional candidate gene studies obsolete”, et al 2019 (apropos of SSC on et al 2019 revisiting the candidate-gene & gene-environment debacles)

“Objecting to experiments that compare two unobjectionable policies or treatments”, et al 2019

“Meta-Research: A comprehensive review of randomized clinical trials in three medical journals reveals 396 [13%] medical reversals”, Herrera- et al 2019

“The Big Crunch”, David 1994

“Super-centenarians and the oldest-old are concentrated into regions with no birth certificates and short lifespans”, 2019 (“a primary role of fraud and error in generating remarkable human age records”)

“How Gullible are We? A Review of the Evidence from Psychology and Social Science”, 2017

“Registered reports: an early example and analysis”, et al 2019 (parapsychology was the first field to use Registered Reports in 1976, which cut statistical-significance rates by 2⁄3rds: 1975a, 1975b, 1976[11])

“Anthropology’s Science Wars: Insights from a New Survey”, et al 2019 (visualizations)

“Effect of Lower Versus Higher Red Meat Intake on Cardiometabolic and Cancer Outcomes: A Systematic Review of Randomized Trials”, et al 2019 (“diets lower in red meat may have little or no effect on all-cause mortality (HR 0.99 [95% CI, 0.95 to 1.03])”)

“Reading Lies: Nonverbal Communication and Deception”, et al 2019

David Rosenhan’s Rosenhan experiment:

Did psychologist David Rosenhan fabricate his famous 1973 “Being Sane in Insane Places” mental hospital exposé? (Andrew Scull/1976; Nature & et al 2020 review; on the Rosenhan experiment; interesting to consider 1975’s criticisms in light of this)

“Orchestrating false beliefs about gender discrimination”: what did the famous ‘blind orchestra audition’ study show? (Gelman commentary)

“Crowdsourcing data analysis: Do soccer referees give more red cards to dark skin toned players?” (61 analysts examine the same dataset for the same research question to see how much variation in approach determines results); “Crowdsourcing Hypothesis Tests”, et al 2019 (“200 Researchers, 5 Hypotheses, No Consistent Answers: Just how much wisdom is there in the scientific crowd?”)

“How a Publicity Blitz Created The Myth of Subliminal Advertising”, 1992

“‘We are not recommending you give to Texas per se’: GiveDirectly’s bold disaster-relief experiment”

“John Arnold Made a Fortune at Enron. Now He’s Declared War on Bad Science”

“Reproducibility Initiative receives $1.3M grant to validate 50 landmark cancer studies”

“The Four Most Dangerous Words? A New Study Shows”, Laura Arnold, 2017 TED talk

“Matthew Walker’s Why We Sleep Is Riddled with Scientific and Factual Errors”, Alexey Guzey

“The maddening saga of how an Alzheimer’s ‘cabal’ thwarted progress toward a cure for decades”

“A national experiment reveals where a growth mindset improves achievement”, et al 2019

“A Meta-Analysis of Procedures to Change Implicit Measures”, et al 2019; “Psychology’s Favorite Tool [the IAT] for Measuring Racism Isn’t Up to the Job: Almost two decades after its introduction, the implicit association test has failed to deliver on its lofty promises”; “The Implicit Association Test in Introductory Psychology Textbooks: Blind Spot for Controversy”, 2021

“Rational Judges, Not Extraneous Factors In Decisions”; “Impossibly Hungry Judges”

“Compliance with legal requirement to report clinical trial results on ClinicalTrials.gov: a cohort study”, et al 2020; “FDA and NIH let clinical trial sponsors keep results secret and break the law”, Science

“Likelihood of Null Effects of Large NHLBI Clinical Trials Has Increased over Time”, 2015

“Variability in the analysis of a single neuroimaging dataset by many teams”, Botvinik- et al 2020

“Towards Reproducible Brain-Wide Association Studies”, et al 2020

“Can Short Psychological Interventions Affect Educational Performance? Revisiting the Effect of Self–Affirmation Interventions”, Serra- et al 2020

“Do police killings of unarmed persons really have spillover effects? Reanalyzing et al 2018”, 2019

“The statistical properties of RCTs and a proposal for shrinkage”, van et al 2020

“Off-target toxicity is a common mechanism of action of cancer drugs undergoing clinical trials”, et al 2019

“Two Researchers Challenged a Scientific Study About Violent Video Games—and Took a Hit for Being Right”; “Research linking violent entertainment to aggression retracted after scrutiny”

“RCTs to Scale: Comprehensive Evidence from Two Nudge Units”, Della2020

“Time to assume that health research is fraudulent until proven otherwise?”

“The Chrysalis Effect: How Ugly Initial Results Metamorphose Into Beautiful Articles”, et al 2014

“Putting the Self in Self-Correction: Findings From the Loss-of-Confidence Project”, et al 2021

“No effect of ‘watching eyes’: An attempted replication and extension investigating individual differences”, et al 2021

“The Role of Human Fallibility in Psychological Research: A Survey of Mistakes in Data Management”, et al 2021

“A pre-registered, multi-lab non-replication of the action-sentence compatibility effect (ACE)”, et al 2021

“This scientist accused the supplement industry of fraud. Now, his own work is under fire”

Pygmalion Effect

Pygmalion In The Classroom: Teacher Expectation and Pupil’s Intellectual Development, 1968; Experimenter Effects in Behavioral Research, 1976

“Reviews: Rosenthal, Robert, and Jacobson, Lenore; Pygmalion in the Classroom. 1968”, 1968; “But You Have To Know How To Tell Time”, 1969

“Unfinished Pygmalion”, 1969

“Five Decades Of Public Controversy Over Mental Testing”, 1975

“The Self-Fulfillment of the Self-Fulfilling Prophecy: A Critical Appraisal”, 1987a; “Does Research Count in the Lives of Behavioral Scientists?”, 1987b

“We’ve Been Here Before: The Replication Crisis over the ‘Pygmalion Effect’”

Datamining

Some examples of how ‘datamining’ or ‘data dredging’ can manufacture correlations on demand from large datasets by comparing enough variables:

Rates of autism diagnoses in children correlate with age, or should we blame organic food sales?; height & vocabulary or foot size & math skills may correlate strongly (in children); national chocolate consumption correlates with Nobel prizes[12], as do borrowing from commercial banks & buying luxury cars & serial-killers/mass-murderers/traffic-fatalities[13]; moderate alcohol consumption predicts increased lifespan and earnings; the role of storks in delivering babies may have been underestimated; children and people with high self-esteem have higher grades & lower crime rates etc., so “we all know in our gut that it’s true” that raising people’s self-esteem “empowers us to live responsibly and that inoculates us against the lures of crime, violence, substance abuse, teen pregnancy, child abuse, chronic welfare dependency and educational failure”, unless perhaps high self-esteem is caused by high grades & success, boosting self-esteem has no experimental benefits, and may backfire?

Those last can be generated ad nauseam: Shaun Gallagher’s Correlated (also a book) surveys users & compares against all previous surveys with 1k+ correlations.

Tyler Vigen’s “spurious correlations” catalogues 35k+ correlations, many with r > 0.9, based primarily on US Census & CDC data.

Google Correlate “finds Google search query patterns which correspond with real-world trends” based on geography or user-provided data, which offers endless fun (“Facebook”/“tapeworm in humans”, r = 0.8721; “Superfreakonomic”/“Windows 7 advisor”, r = 0.9751; Irish electricity prices/“Stanford webmail”, r = 0.83; “heart attack”/“pink lace dress”, r = 0.88; US states’ parasite loads/“booty models”, r = 0.92; US states’ family ties/“how to swim”; metronidazole/“Is Lil’ Wayne gay?”, r = 0.89; Clojure/“prnhub”, r = 0.9784; “accident”/“itchy bumps”, r = 0.87; “migraine headaches”/“sciences”, r = 0.77; “Irritable Bowel Syndrome”/“font download”, r = 0.94; interest-rate-index/“pill identification”, r = 0.98; “advertising”/“medical research”, r = 0.99; Barack Obama 2012 vote-share/“Top Chef”, r = 0.88; “losing weight”/“houses for rent”, r = 0.97; “Bieber”/tonsillitis, r = 0.95; …)

And on less secular themes, do churches cause obesity & do Welsh rugby victories predict papal deaths?

Financial data-mining offers some fun examples; there’s the Super Bowl/stock-market one which worked well for several decades; and it’s not very elegant, but a 3-variable model (Bangladeshi butter, American cheese, joint sheep population) reaches R² = 0.99 on 20 years of the S&P 500.