Miscellaneous statistical stuff

- Critiques

- “Someone Should Do Something”: Wishlist of Miscellaneous Project Ideas

- Estimating Censored Test Scores

- The Traveling Gerontologist Problem

- Bayes Nets

- Genome Sequencing Costs

- Proposal: Hand-Counting Mobile App for More Fluid Group Discussions

- Air Conditioner Replacement

- Some Ways of Dealing With Measurement Error

- Value of Information: Clinical Prediction Instruments for Suicide

- Bayesian Model Averaging

- Dealing With All-Or-Nothing Unreliability of Data

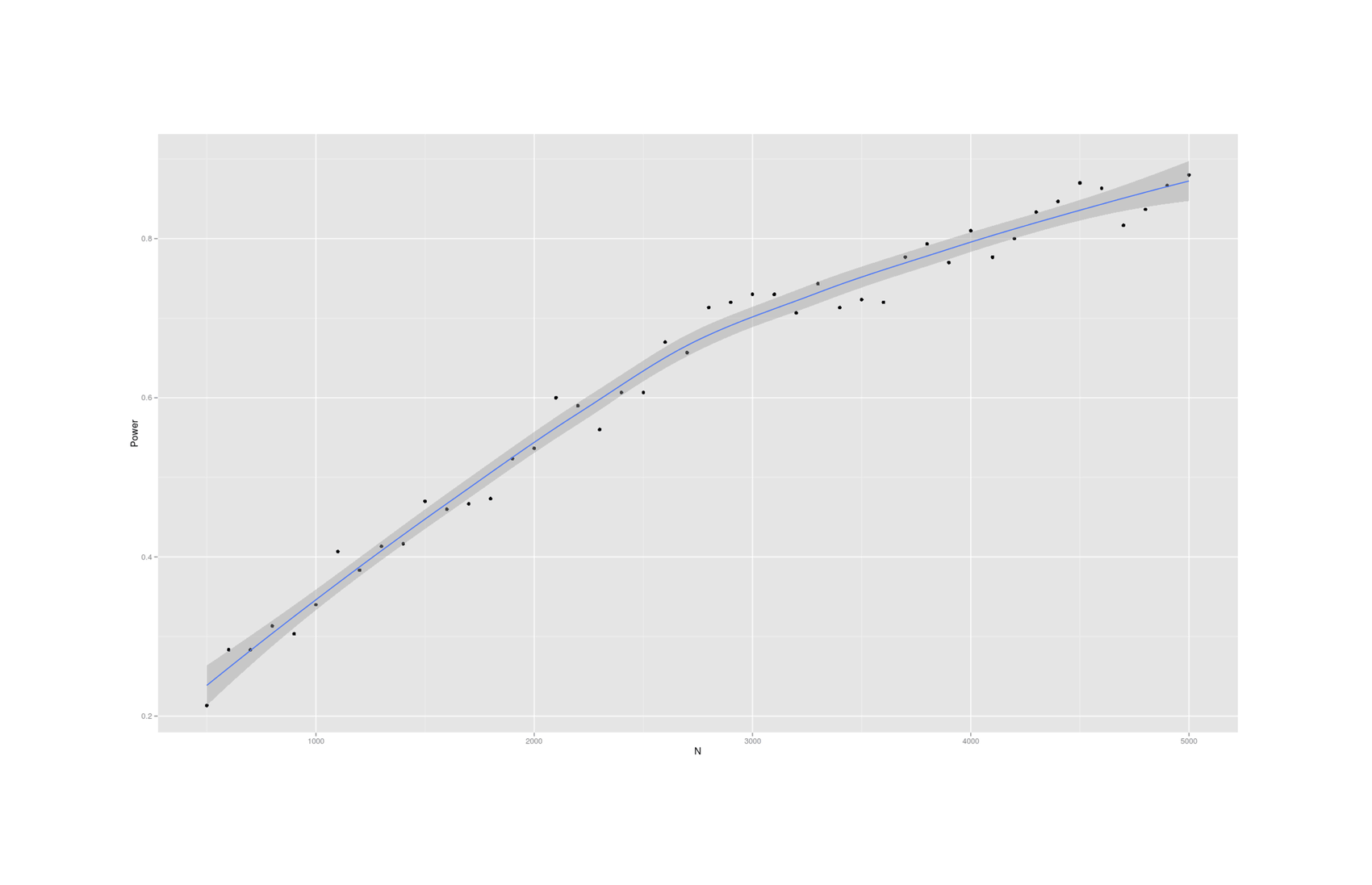

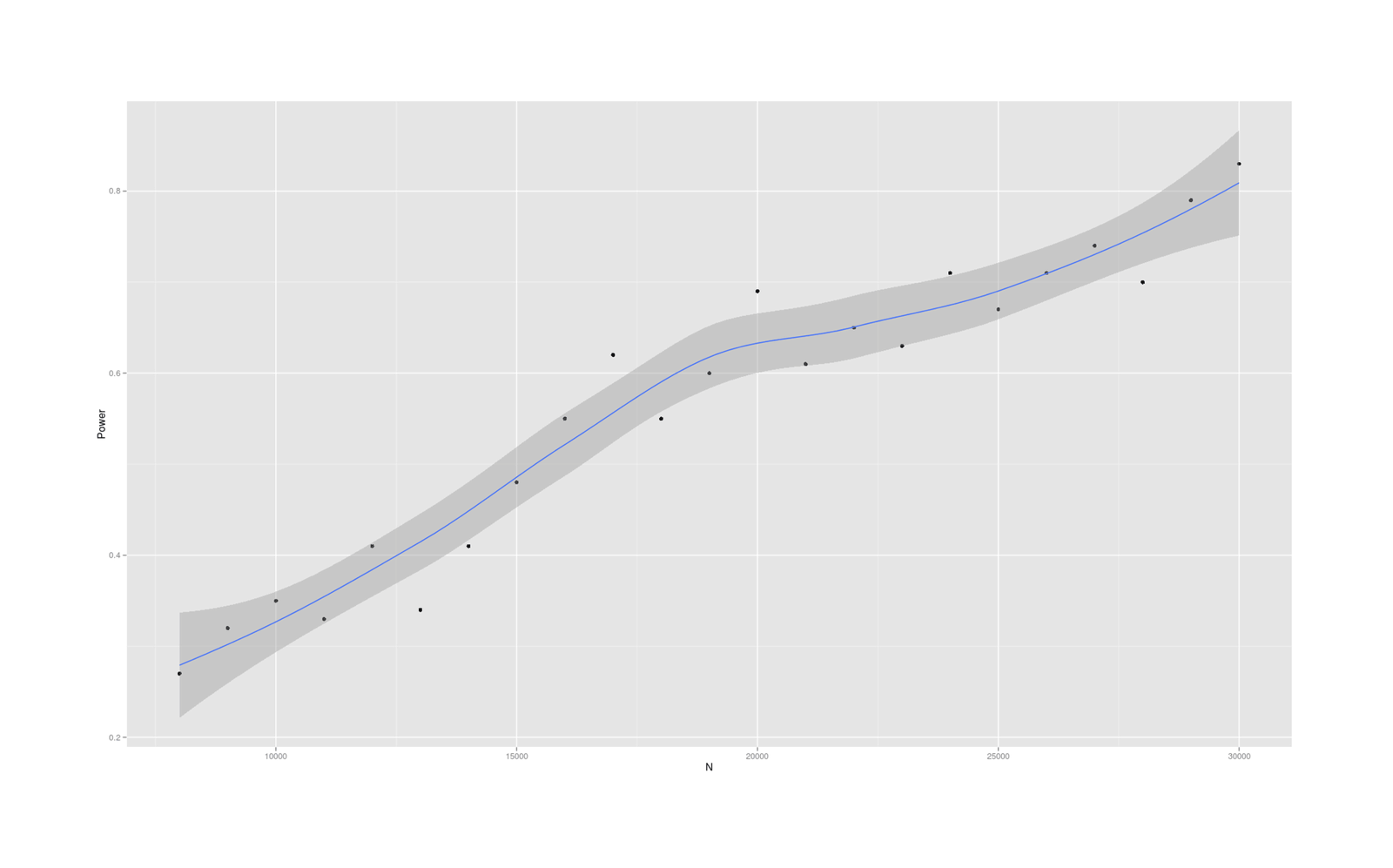

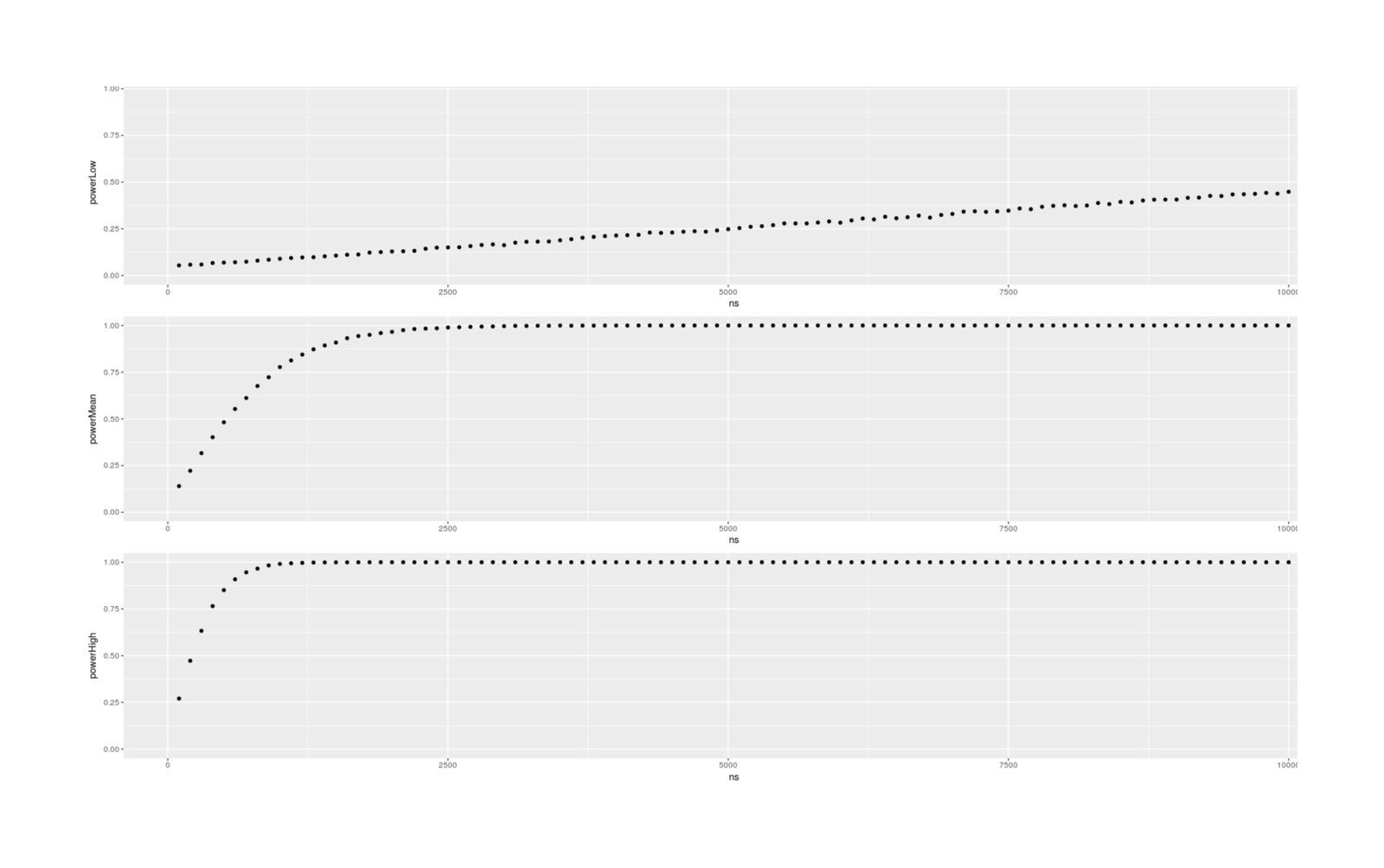

- Dysgenics Power Analysis

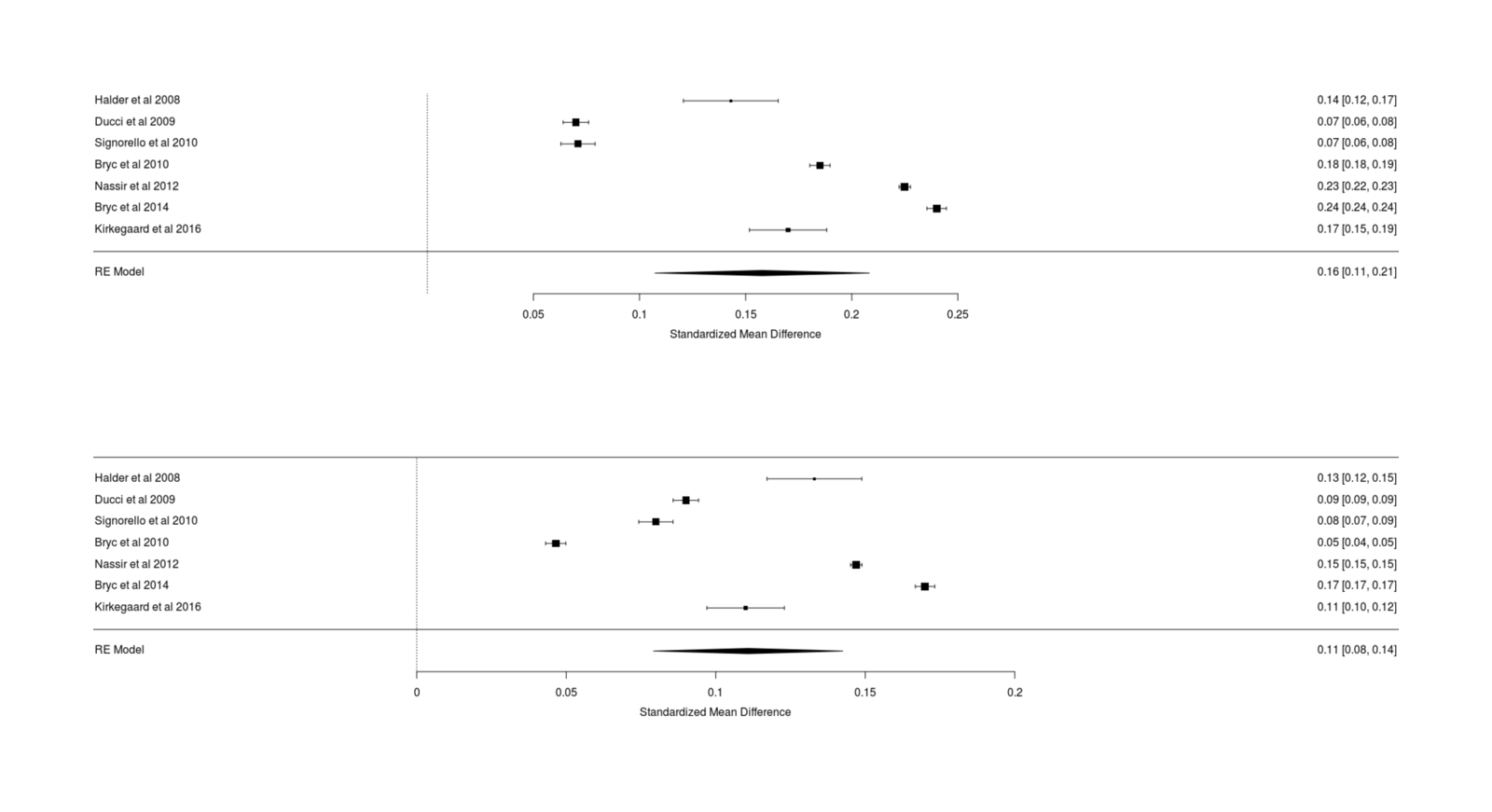

- Power Analysis for Racial Admixture Studies of Continuous Variables

- Operating on an Aneurysm

- The Power of Twins: Revisiting Student’s Scottish Milk Experiment Example

- RNN Metadata For Mimicking Individual Author Style

- MCTS

- Candy Japan A/B Test

- DeFries-Fulker Power Analysis

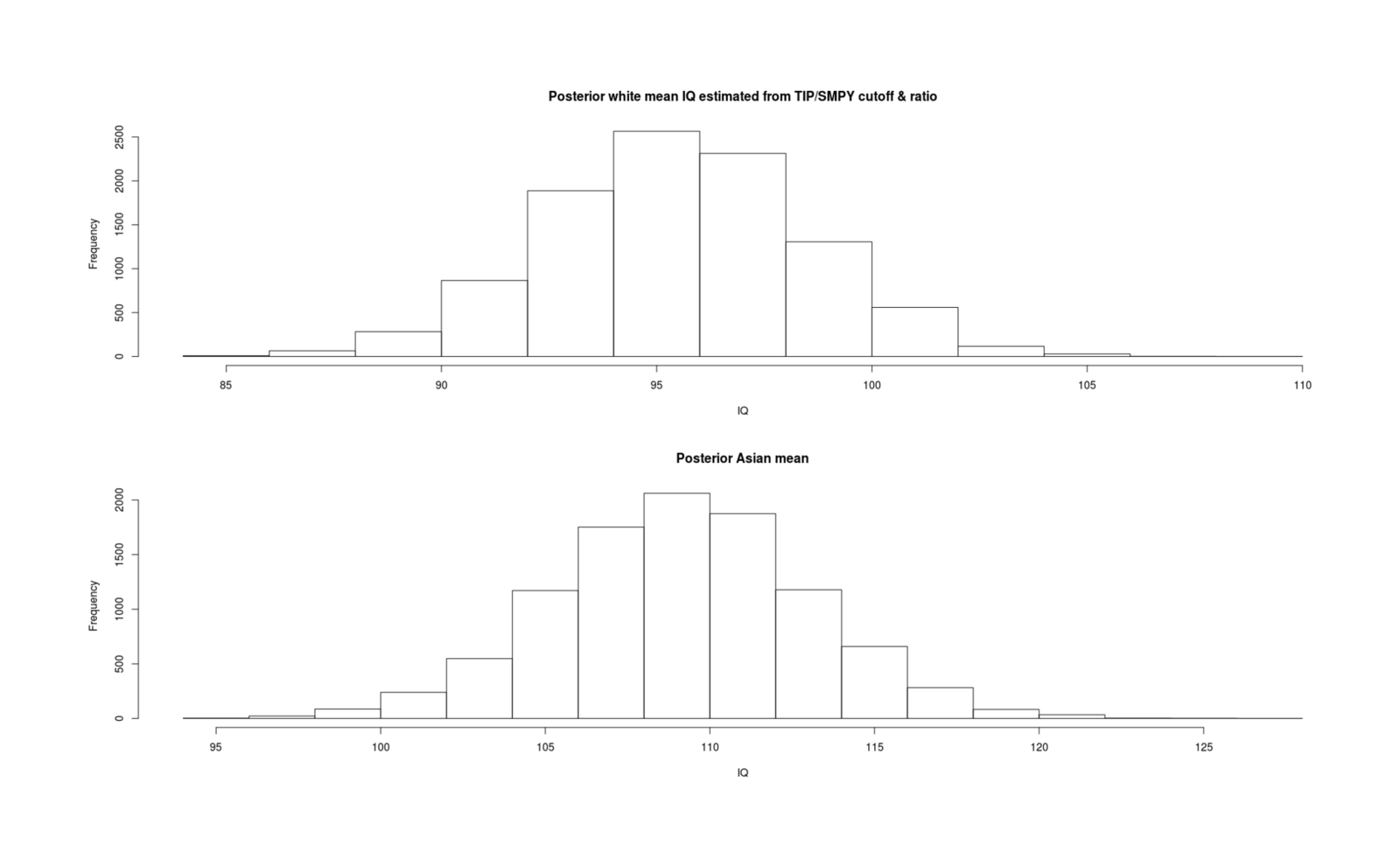

- Inferring Mean IQs From SMPY/TIP Elite Samples

- Genius Revisited: On the Value of High IQ Elementary Schools

- Great Scott! Personal Name Collisions and the Birthday Paradox

- Detecting Fake (human) Markov Chain Bots

- Optimal Existential Risk Reduction Investment

- Model Criticism via Machine Learning

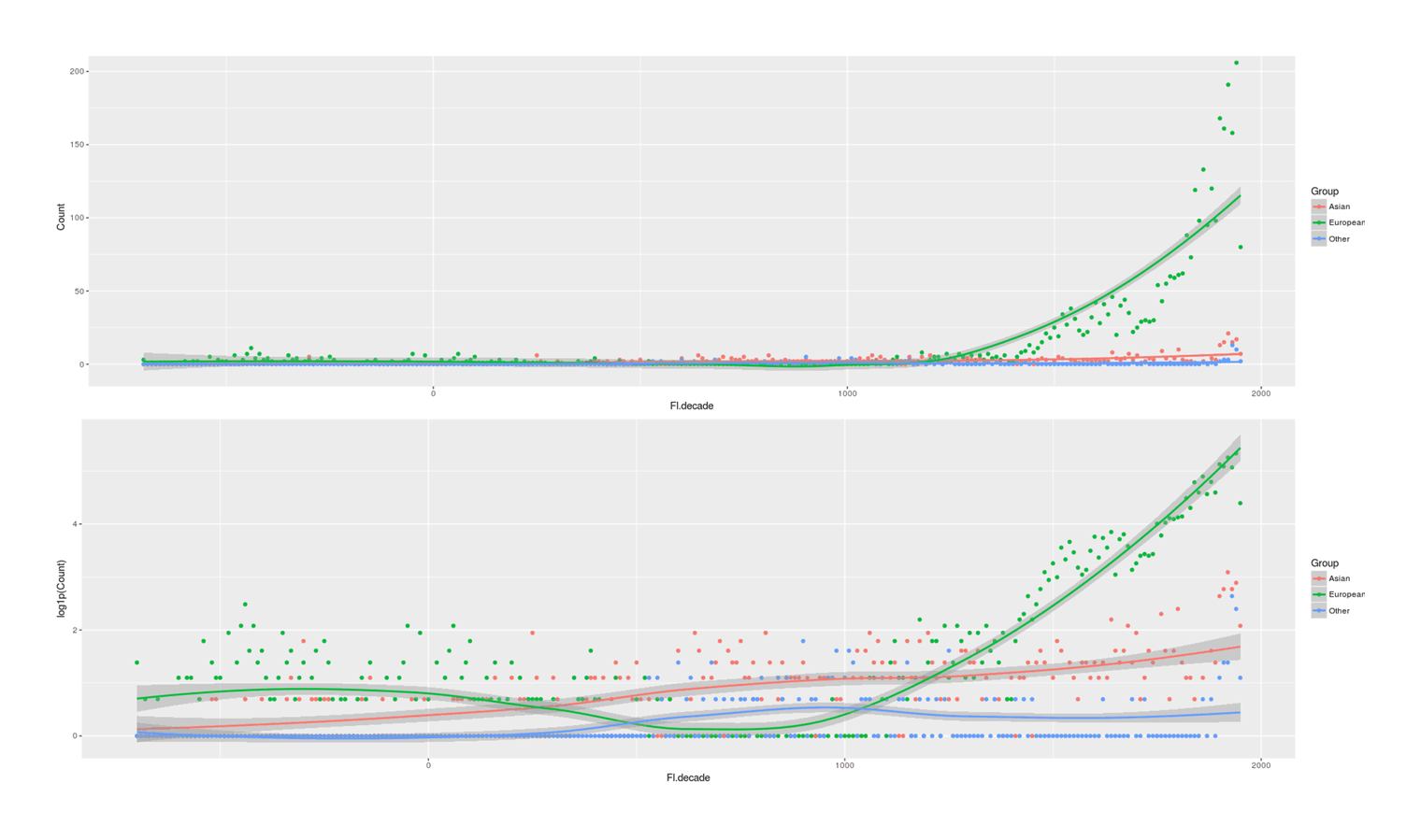

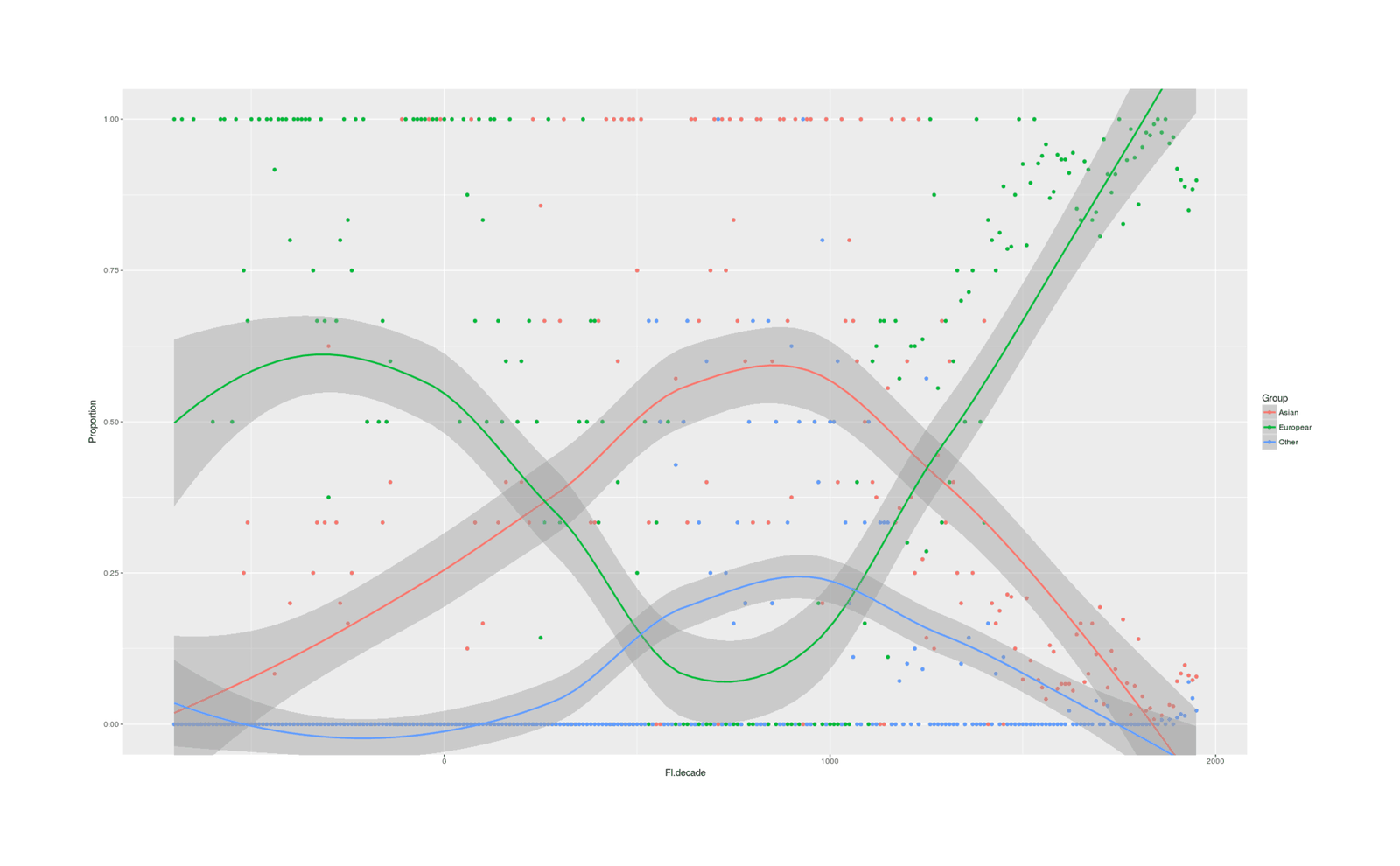

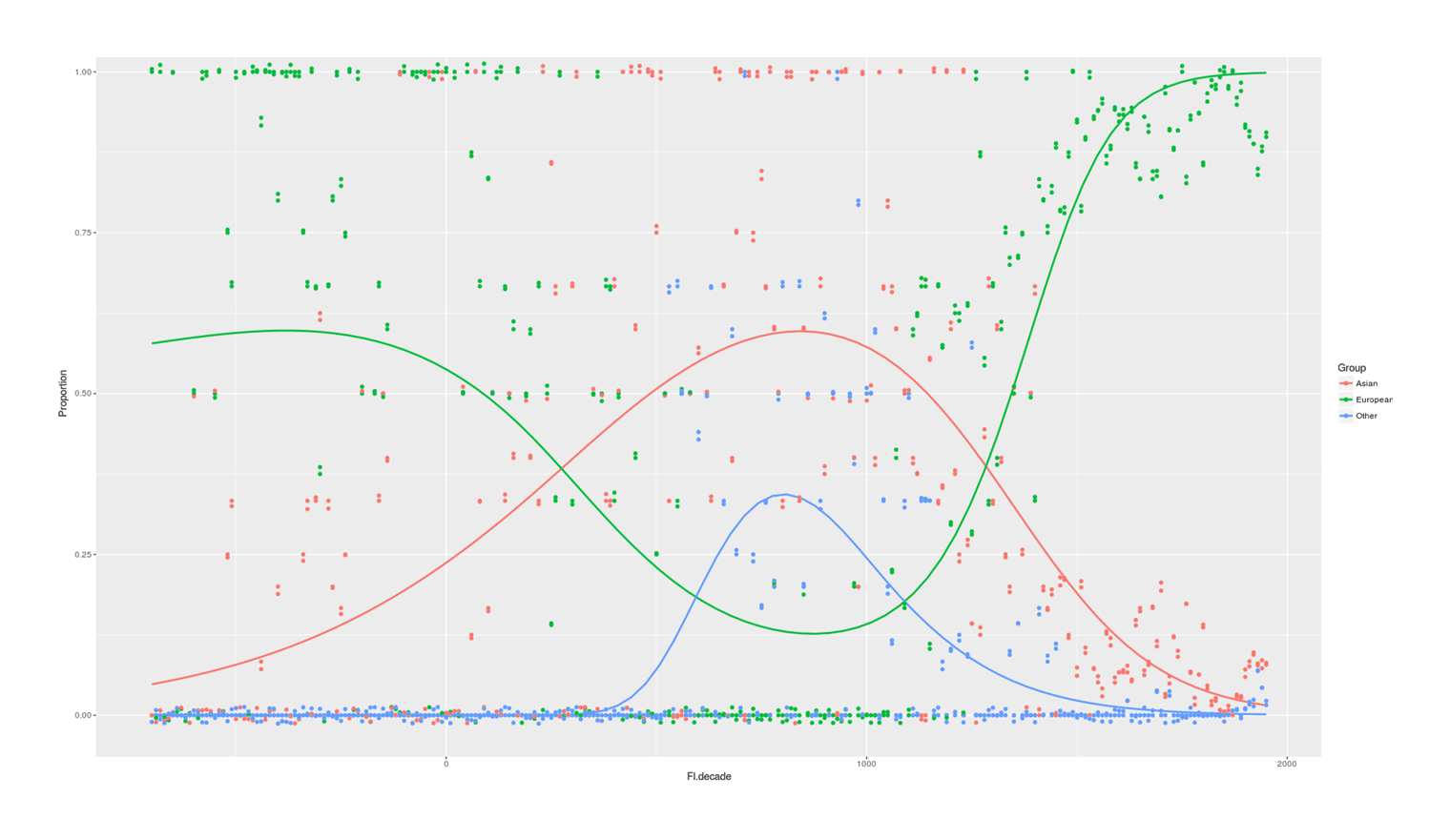

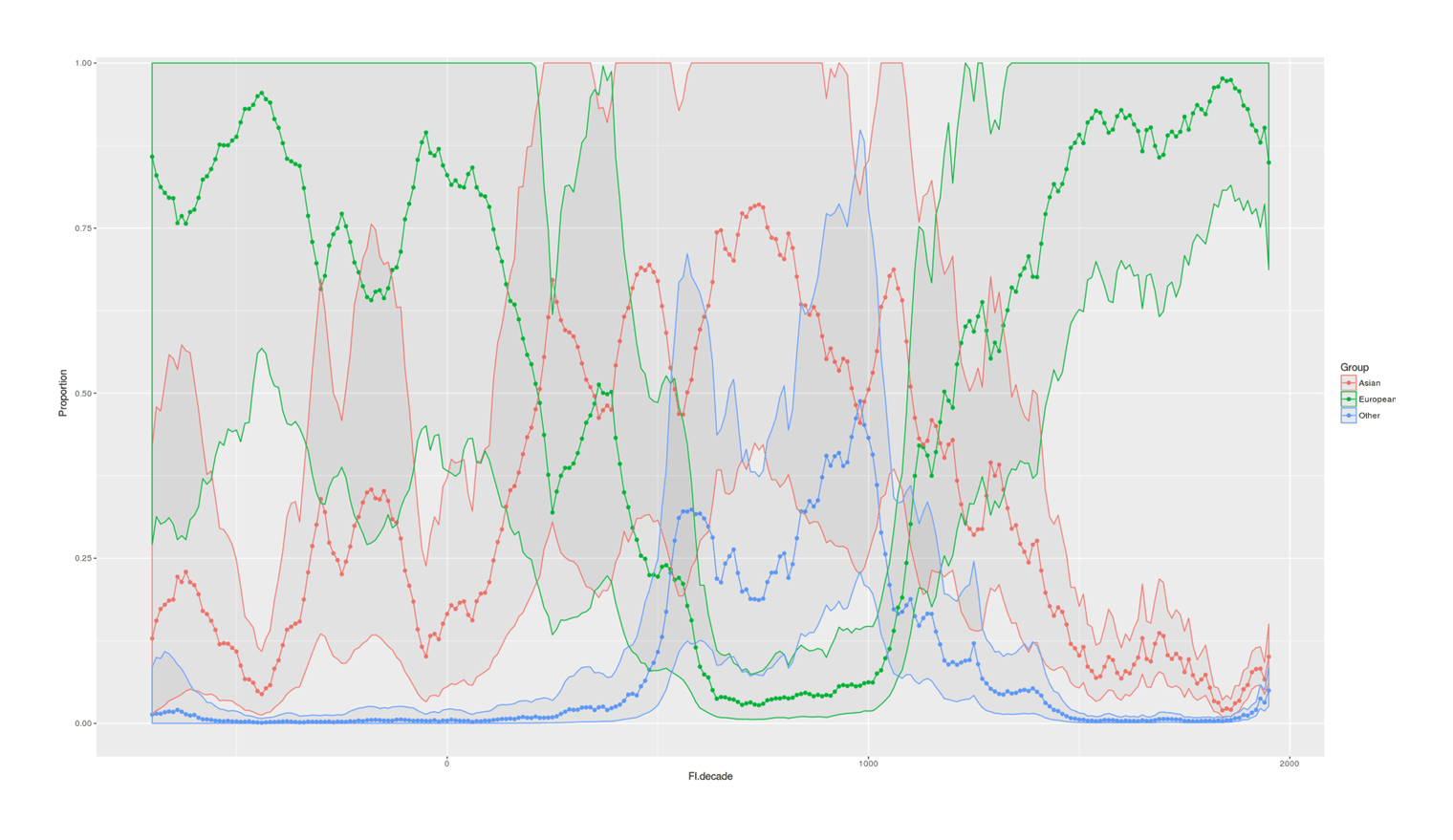

- Proportion of Important Thinkers by Global Region Over Time in Charles Murray’s Human Accomplishment

- Program for Non-Spaced-Repetition Review of past Written Materials for Serendipity & Rediscovery: Archive Revisiter

- On the Value of New Statistical Methods

- Bayesian Power Analysis: Probability of Exact Replication

- Expectations Are Not Expected Deviations and Large Number of Variables Are Not Large Samples

- Oh Deer: Could Deer Evolve to Avoid Car Accidents?

- Evolution As Backstop for Reinforcement Learning

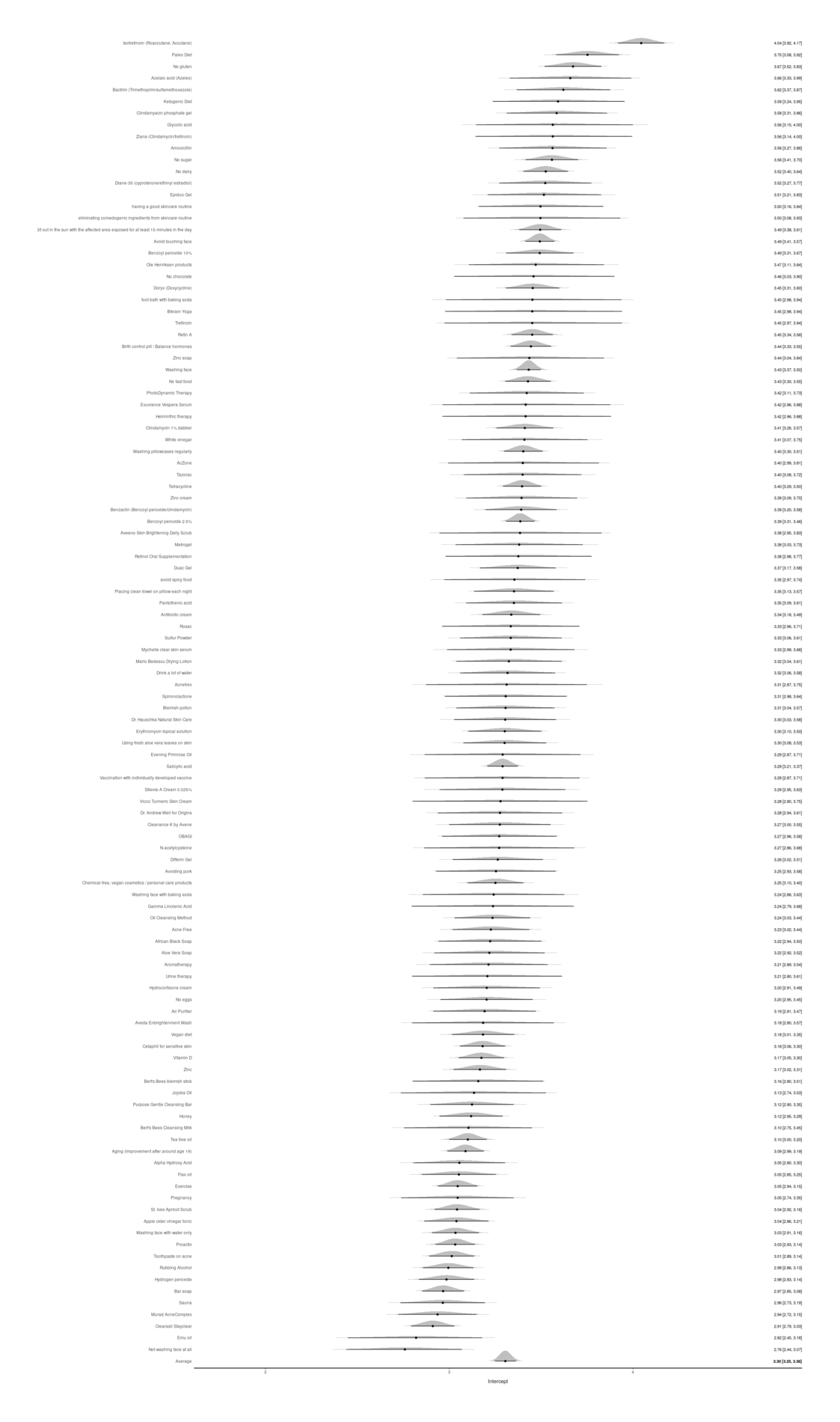

- Acne: a Good Quantified Self Topic

- Fermi Calculations

- Selective Emigration and Personality Trait Change

- The Most Abandoned Books on GoodReads

- Best Student Ever!

- Little’s Law in the Wild

Critiques

-

criticism of teeth-removal experiment in rats https://www.lesswrong.com/r/discussion/lw/kfb/open_thread_30_june_2014_6_july_2014/b1u3

-

criticism of small Noopept self-experiment http://www.bluelight.org/vb/threads/689936-My-Paper-quot-Noopept-amp-The-Placebo-Effect-quot?p=11910708&viewfull=1#post11910708

-

why Soylent is not a good idea https://www.lesswrong.com/lw/hht/link_soylent_crowdfunding/90y7

-

misinterpretation of fluoridation meta-analysis and ignorance of VoI http://theness.com/neurologicablog/index.php/anti-fluoride-propaganda-as-news/#comment-76400

-

https://www.lesswrong.com/lw/1lt/case_study_melatonin/8mgf

-

Fulltext: https://dl.dropboxusercontent.com/u/280585369/2014-dubal.pdf is this possible? http://nextbigfuture.com/2014/05/kl-vs-gene-makes-up-six-iq-points-of.html#comment-1376748788 https://www.reddit.com/r/Nootropics/comments/25233r/boost_your_iq_by_6_points/chddd7f

-

tACS causes lucid dreaming: https://www.reddit.com/r/LucidDreaming/comments/27y7n6/no_brain_stimulation_will_not_get_you_lucid/ck6isgo

-

Herbalife growth patterns: https://www.reddit.com/r/business/comments/24aoo2/what_unsustainable_growth_looks_like_herbalife/ch5hwtv

-

Plausible correlate of Fairtrade: https://www.reddit.com/r/Economics/comments/26jb2d/surprise_fairtrade_doesnt_benefit_the_poor/chrx9s4

-

slave whippings vs cotton production https://www.lesswrong.com/r/discussion/lw/kwc/open_thread_sept_17_2014/bajv

-

whether a study on mental illness & violence shows schizophrenics are not more likely to murder but rather be murdered: https://www.reddit.com/r/psychology/comments/2fwjs8/people_with_mental_illness_are_more_likely_to_be/ckdq50k / http://www.nationalelfservice.net/publication-types/observational-study/people-with-mental-illness-are-more-likely-to-be-victims-of-homicide-than-perpetrators-of-homicide/#comment-95507 (see also http://slatestarscratchpad.tumblr.com/post/120950150581/psycholar-giraffepoliceforce-museicetc https://www.reddit.com/r/slatestarcodex/comments/744rqn/violence_is_not_a_product_of_mental_illness/dnwb1kj/ )

-

Fortune analysis of higher female CEO returns https://www.lesswrong.com/r/discussion/lw/l3b/contrarian_lw_views_and_their_economic/bftw

-

algae/IQ: https://www.lesswrong.com/r/discussion/lw/l9v/open_thread_nov_17_nov_23_2014/bm7o

-

synaesthesia/IQ: https://www.reddit.com/r/psychology/comments/2mryte/surprising_iq_boost_12_in_average_by_a_training/cm760v8

-

misinterpretation: https://slatestarcodex.com/2014/12/08/links-1214-come-ye-to-bethlinkhem/#comment-165197

-

underpowered/multiple-correction jobs program: https://slatestarcodex.com/2014/12/08/links-1214-come-ye-to-bethlinkhem/#comment-165197

-

claimed fall in digit span backwards minuscule and non-statistically-significant, no evidence of heterogeneity beyond variability due to sample size https://drjamesthompson.blogspot.com/2015/04/digit-span-bombshell.html?showComment=1428096775425#c4097303932864318518

-

Claimed randomized experiment of whether sushi tastes worse after freezing is not actually a randomized experiment https://www.reddit.com/r/science/comments/324xmf/randomized_doubleblind_study_shows_the_quality_of/cq8dmsb

-

sexual openness result undermined by ceiling effect http://mindhacks.com/2015/04/28/when-society-isnt-judging-womens-sex-drive-rivals-mens/#comment-362749

-

music study claiming WM interaction: possible ceiling effect? see FB PM

-

attempt to measure effect of Nazi anti-schizophrenia eugenics program failed to use breeder’s equation to estimate possible size of effect, which is too small to detect with available data and hence attempt is foredoomed: https://rpubs.com/JLLJ/GSCZ

-

claim high IQ types almost 100% failure rates due to inappropriate model assumption of normal distribution + narrow standard deviation: https://polymatharchives.blogspot.com/2015/01/the-inappropriately-excluded.html?showComment=1441741719623#c1407914596750199739

-

implausible claims about success rate of facial recognition applied to St Petersburg population: https://news.ycombinator.com/item?id=11491264 (see also “Facial recognition systems stumble when confronted with million-face database”)

-

human Toxoplasma gondii study is not well-powered as authors claim due to incorrect power analysis, and results are evidence for harm: https://blogs.discovermagazine.com/neuroskeptic/2016/02/20/myth-mind-altering-parasite-toxoplasma-gondii/#comment-2755778490 ; https://www.reddit.com/r/slatestarcodex/comments/5vjrmo/toxoplasma_doesnt_cause_adolescent_psychosis/de2x4kh/

-

attempt at attributing Bitcoin price increases to technology improvements: https://www.reddit.com/r/Bitcoin/comments/5tbt8f/buzz_factor_or_innovation_potential_what_explains/ddlmzrz/

-

analysis of designer drug/research chemical activity on Wikipedia is driven almost entirely by editing patterns of just 2 Wikipedia editors particularly interested in the topic: http://calib.ro/chemical-wiki/explorations/2016-09-12-emcdda-watchlist-and-wikipedia-timeline#comment-3277669328

-

failure to use mediation SEM, difference-in-statistical-significance-is-not-a-significant-difference: https://www.reddit.com/r/slatestarcodex/comments/6qwb0q/critical_thinking_skills_are_more_important_than/dl51ubw/

-

Neanderthal ancestry percentage & autism: https://www.reddit.com/r/slatestarcodex/comments/74fevz/findings_suggest_that_high_levels_of_neanderthal/dny3sh9/

-

Anime image classification project likely undone by using non-i.i.d. images

Failed Facebook Critiques

-

Facebook emotion study: https://www.reddit.com/r/psychology/comments/29vg9j/no_emotions_arent_really_contagious_over_facebook/cip7ln5

A reply to http://www.ischool.berkeley.edu/newsandevents/news/20140828facebookexperiment (too long for inclusion in https://news.ycombinator.com/item?id=8378743 )

91 points and no comments? OK, I guess it falls to me to jump on this grenade.

So why is the Facebook study bad science? After 5 screens of meandering anecdotes, insinuations, insults, etc we finally get to a real criticism:

Did people feel betrayed about the lack of informed consent? You know, in psychology research, when people find out they’ve been an unwitting experimental subject, it’s not uncommon for them to feel duped. They’re at least surprised. The only distinction is that academics who experiment on subjects without getting their consent first usually tell people about it immediately afterward. They debrief the subjects and answer questions. They unruffle ruffled feathers. They may allow a subject to remove his or her data from the experiment. In some cases, they even offer follow-up services. Given that Facebook did nothing to inform subjects or make them feel whole again, it’s hard to blame folks for feeling unduly violated.

So? As was pointed out, these experiments are run all the time by all sorts of entities, and by making this criticism you are implicitly arguing that it would be better for Facebook to keep the results secret (like companies usually do) instead of informing us about very relevant results in the brave new world of the Internet. Far from arguing for good science, OP is arguing for bad science as somehow ‘ethical’. (This is quite aside from the issue that informed consent makes no sense and was a kneejerk reaction to abuses that didn’t need the invention of scholastic concepts like ‘informed consent’.)

The experiment also forced many people to contemplate, for the first time, the kind of persuasive power Facebook might surreptitiously wield around the world given its size and scale.

Also not a reason for it being ‘bad science’.

On the other side of the firestorm were people who couldn’t see how the experiment was any different from your run-of-the-mill psychology experiment. Or, alternatively, how it was different from the widespread Internet practice of A/B testing, where you experiment with different variations of a website to see which is most effective at persuading visitors to buy, or download, or whatever the site’s goal is. Some of these experiments feel blatantly manipulative, like the headlines that are constantly tested and retested on visitors to see which ones will get them to click. We have a word for headlines like this: “click-bait.” But nobody ever hands out consent forms.

Oh good, so the author isn’t a complete idiot.

The every-which-way quality of the reaction, I think, comes in part from the fact that the study crossed academic and corporate boundaries, two areas with different ethical standards. It was unclear which to hold the company to.

Wait, what? What happened to all the bloviating earlier about the lack of consent? Now the problem is it ‘crosses boundaries’? WTF. Also: still nothing about how this was ‘bad science’.

If you were a researcher at Facebook, probably one of the things that would provide you with the greatest source of tension about your job would be evidence that the product you’re pushing to half the world’s population actually causes them to feel “negative or left out.” That would be a pretty epic fail for a company that wants to “make the world more open and connected.”

I believe that Kramer is concerned with addressing the popular worry that Facebook makes us unhappy. Not just because I’ve met him but because, in the study, he seems adamant about refuting it. In discussing his findings, Kramer asserts that the study “stands in contrast to theories that suggest viewing positive posts by friends on Facebook may somehow affect us negatively, for example, via social comparison.”

…[long description of ‘social comparison’ which I’m not sure why is in there since the experiment in question strongly suggests it’s not relevant]

Yes, it would suck if that were true and would undermine Facebook’s value, so kudos to Facebook for not hiding its head under a rock and experimenting to find out the truth. Kudos… wait, I forgot, this is ‘lousy social science’ we’re supposed to be booing and hissing about.

In fact, social comparison is often posited as the solution to what’s known as the “Easterlin Paradox,” which finds that, while our happiness increases with our income, societies that get richer do not tend to get happier.

Actually, if you look at the graphs, they do tend to get happier it’s just there’s severe diminishing returns and the graph looks logarithmic rather than linear. Minor point, but it annoys me to think that being wealthier doesn’t help. It does.

[another 5 screens of meandering somewhat related speculation]

In fact, she finds that greater passive consumption over time, controlling for individual predispositions, is associated with lower perceived social support, lower bridging social capital (feeling part of a broader community), and marginally lower positive affect, higher depression, and higher stress.

Gee, I wonder why that might be… No, let’s jump to the insinuation that Facebook causes the higher depression etc. Yeah, that’s plausible.

The first question about the study is whether anything notable happened. This was a common criticism. Although Facebook has tremendous scale, it doesn’t mean the scientific community should care about every effect the company can demonstrate. Neither should the company itself work on small stuff that barely moves the needle. Though Kramer said he removed a lot of emotion from users’ News Feeds (between 10–90% of positive or negative posts), he saw very little change in the emotions users subsequently expressed. All of the changes were 0.1% or less. That’s not 10% or 1% — that’s 0.1%….Still, the small effects raise important questions. Why were they so small?

Bzzt. First hard scientific criticism, and they failed. The reason the effects were small were, as the paper explicitly discusses (OP did read the paper, right? The whole thing? Not just blogs and media coverage?), the intervention was designed to be small (that’s real ethics for you, not bullshit about informed consent), the intervention only affects one of several news sources each user is exposed to (decreasing the intervention still more), and the measure of mood in subsequent items is itself a very noisy measure (measurement error biases the effect downwards). The results are exactly as one would expect and this is an invalid experiment. http://psychcentral.com/blog/archives/2014/06/23/emotional-contagion-on-facebook-more-like-bad-research-methods/ makes the same mistake. The description of how much was removed is also wrong; here’s a quote from the paper:

Two parallel experiments were conducted for positive and negative emotion: One in which exposure to friends’ positive emotional content in their News Feed was reduced, and one in which exposure to negative emotional content in their News Feed was reduced. In these conditions, when a person loaded their News Feed, posts that contained emotional content of the relevant emotional valence, each emotional post had between a 10% and 90% chance (based on their User ID) of being omitted from their News Feed for that specific viewing. It is important to note that this content was always available by viewing a friend’s content directly by going to that friend’s “wall” or “timeline,” rather than via the News Feed. Further, the omitted content may have appeared on prior or subsequent views of the News Feed. Finally, the experiment did not affect any direct messages sent from one user to another…Both experiments had a control condition, in which a similar proportion of posts in their News Feed were omitted entirely at random (ie. without respect to emotional content). Separate control conditions were necessary as 22.4% of posts contained negative words, whereas 46.8% of posts contained positive words. So for a person for whom 10% of posts containing positive content were omitted, an appropriate control would withhold 10% of 46.8% (ie. 4.68%) of posts at random, compared with omitting only 2.24% of the News Feed in the negativity-reduced control.

Note the difference between writholding ‘4.68% of posts’ or ‘2.24% of the News Feed’ and OP’s desccription as removing ‘between 10-90% of positive or negative posts’.

Words were determined to be positive or negative using a dictionary provided by the Linguistic Inquiry and Word Count software, known as LIWC, last updated in 2007. About 47% of posts in the experiment contained positive words while about 22% of posts contained negative words, leaving 31% of posts with no emotional words at all, as defined by LIWC. Everything but the text of the posts was discarded for this analysis, including photos.

The third study, which looks at the contagion of negative emotion in instant messaging, finds that LIWC actually cannot tell the difference between groups sharing negative vs. neutral emotions.

Protip: don’t cite broken links like http://dbonline.igroupnet.com/ACM.TOOLS/Rawdata/Acm1106/fulltext/1980000/1979049/p745-guillory.pdf without any other citation data. I can’t figure out what this study is supposed to be but given that the link is broken despite the blog post being written barely a month or two ago, I suspect OP is misrepresenting it.

Looking more broadly, one study compares a number of similar techniques and finds that LIWC is a middling performer, at best. It is consistently too positive in its ratings, even labeling the conversation in social media around the H1N1 disease outbreak as positive overall. Another study that looks at emotional contagion in instant messaging finds that, even when participants have been induced to feel sad, LIWC still thinks they’re positive.

Good thing the experiment tested multiple conditions and found similar results.

Used in raw form as in the Facebook experiment, however, it appears to be substantially inferior to machine learning.

Sure. But should we let the perfect be the enemy of better?

Further, we know next to nothing about how well LIWC performs in social media when it comes to emotions under the big headings of positive and negative emotion. If it detects some negative emotions, like anger, better than others like sadness this too may bias what we learn from the Facebook experiment.

Yes, maybe the LIWC works well in these circumstances, maybe it doesn’t. Who knows? One could write this of any instrument or analysis being applied in a new situation. I hear the philosophers call this ‘the problem of induction’; maybe they have a solution.

In a word: no. Facebook posts are likely to be a highly biased representation of how Facebook makes people feel because Facebook posts are a highly biased representation of how we feel in general…Looking at social situations in general, we know for example that there are powerful pressures to conform to the attitudes, feelings and beliefs of others. And so if we look at Facebook from this standpoint, it’s easy to see how the effects reported in the Facebook experiment might be due to conformity rather than genuine emotional contagion. Consciously or unconsciously, we may sense a certain emotional tone to our News Feeds and therefore adapt what we post, ever so slightly, so that we don’t stick out too much.

Oh for heaven’s sake. So if they had found a ‘social comparison’ effect, then that’s proof of social comparison; and if they didn’t, well, that’s OK because ‘Facebook posts are likely to be highly biased’ and it’s all due to conformity! Way to explain every possible outcome there, OP. Just being biased doesn’t mean you can’t randomize interventions and learn.

Experience sampling involves randomly interrupting people as they go about their lives to ask how they’re feeling in the moment. It’s private, so it’s less subject to social biases. It does not rely on recollections, which can be off. And it solicits experiences evenly across time, rather than relying on only the moments or feelings people think to share.

But wait! I thought we ‘consciously or unconsciously’ self-censored, and “If we censor fully a third of what we want to express at the last minute, how much are we censoring before we even reach for the keyboard? [to report an experience sample]”? So the question is which source of bias do we prefer: people knowing they’re in an experiment and responding to perceived experimenter demands, or people not knowing and going about life as normal? I know which I prefer, especially since the research has actually been done…

…

Oh my god, it just keeps going on and on doesn’t it? Dude really likes experience sampling, but I’m thinking he needs to write more concisely. OK, I’m going to wrap up here because I’d like to read something else today. Let’s summarize his complaints and my counter-objections:

-

no consent: irrelevant to whether this was good science or ‘lousy social science’

-

crossed boundaries between corporations and academia: likewise irrelevant; also, welcome to the modern Internet

-

small effect size: misunderstood the statistical design of study and why it was designed & expected to have small effects

-

used LIWC with high error rate for measuring emotionality of posts: if random error, biases effect to zero and so is not an argument against statistically-significant findings

-

and LIWC may have systematic error towards positivity: apparently not an issue as negative & positive conditions agreed, and the studies he cites in support of this claim are mixed or unavailable

-

also, other methods are better than LIWC: sure. But that doesn’t mean the results are wrong

-

maybe LIWC has large unknown biases applied to short social media texts: possible, but it’s not like you have any real evidence for that claim

-

Facebook news posts are a biased source of mood anyway: maybe, but they still changed after random manipulation

-

experience sampling is sooooooo awesome: and also brings up its own issues of biases and I don’t see how this would render the Facebook study useless anyway even if we granted it (like complaints #1, 2, 6, 7)

Now, I don’t want to overstate my criticisms here. The author has failed to show the Facebook study is worthless (I’d wager much more money on the Facebook results replicating than 95% of the social science research I’ve read) and it would be outright harmful for Facebook to aim for large effect sizes in future studies, but he does at least raise some good points about improving the followup work: Facebook certainly should be providing some of its cutting-edge deep networks for sentiment analysis for research like this after validating them if it wants to get more reliable results, and it would be worthwhile to run experience sampling approaches to see what happens there, in addition to easier website tests (in addition, not instead of).

Correlation=Causation in Cancer Research

Failed attempt at estimating P(causation|correlation):

How often does correlation=causality? While I’m at it, here’s an example of how not to do it… “A weight of evidence approach to causal inference”, Swaen & van 2009:

Objective: The Bradford Hill criteria are the best available criteria for causal inference. However, there is no information on how the criteria should be weighed and they cannot be combined into one probability estimate for causality. Our objective is to provide an empirical basis for weighing the Bradford Hill criteria and to develop a transparent method to estimate the probability for causality. Study Design and Setting: All 159 agents classified by International Agency for Research of Cancer as category 1 or 2A carcinogens were evaluated by applying the nine Bradford Hill criteria. Discriminant analysis was used to estimate the weights for each of the nine Bradford Hill criteria.

Results: The discriminant analysis yielded weights for the nine causality criteria. These weights were used to combine the nine criteria into one overall assessment of the probability that an association is causal. The criteria strength, consistency of the association and experimental evidence were the three criteria with the largest impact. The model correctly predicted 130 of the 159 (81.8%) agents. Conclusion: The proposed approach enables using the Bradford Hill criteria in a quantitative manner resulting in a probability estimate of the probability that an association is causal.

Sounds reasonable, right? Take this IARC database, presumably of carcinogens known to be such by randomized experiment, and see how well the correlate studies predict after training with LDA - you might not want to build a regular linear model because those tend to be weak and not too great at prediction rather than inference. It’s not clear what they did to prevent overfitting, but reading through, something else strikes me:

The IARC has evaluated the carcinogenicity of a substantial number of chemicals, mixtures, and exposure circumstances. These evaluations have been carried out by expert interdisciplinary panels of scientists and have resulted in classification of these agents or exposure conditions into human carcinogens (category 1) probable human carcinogens (category 2A), possible human carcinogens (category 2B), not classifiable agents (category 3), and chemicals that are probably not carcinogenic to humans (category 4) (IARC, 2006). Although the IARC Working Groups do not formally use the Bradford Hill criteria to draw causal inferences many of the criteria are mentioned in the individual reports. For instance, the preamble specifically mentions that the presence of a dose-eresponse is an important consideration for causal inference. In this analysis, the IARC database serves as the reference database although we recognize that it may contain some disputable classifications. However, to our knowledge there is no other database containing causal inferences that were compiled by such a systematic process involving leading experts in the areas of toxicology and epidemiology.

Wait.

These evaluations have been carried out by expert interdisciplinary panels of scientists and have resulted in classification of these agents or exposure conditions into human carcinogens

evaluations have been carried out by expert interdisciplinary panels

IARC Working Groups do not formally use the Bradford Hill criteria to draw causal inferences many of the criteria are mentioned

Wait. So their database with causality/non-causality classifications is… based on… opinion. They got some experts together and asked them.

And the experts use the same criterion which they are using to predict the classifications.

What. So it’s circular. Worse than circular, randomization and causality never even enter the picture. They’re not doing ‘causal inference’, nor are they giving an ‘overall assessment of the probability that an association is causal’. And their conclusion (“The proposed approach enables using the Bradford Hill criteria in a quantitative manner resulting in a probability estimate of the probability that an association is causal.”) certainly is not correct - at best, they are predicting expert opinion (and maybe not even that well), they have no idea how well they’re predicting causality.

But wait, maybe the authors aren’t cretins or con artists, and have a good justification for this approach, so let’s check out the Discussion section where they discuss RCTs:

Using the results from randomized controlled clinical trials as the gold standard instead of the IARC database could have been an alternative approach for our analysis. However, this alternative approach has several disadvantages. First, only a selection of risk factors reported in the literature have been investigated by means of trials, certainly not the occupational and environmental chemicals. Second, there are instances in which randomized trials have yielded contradictory results, for instance, in case of several vitamin supplements and cancer outcomes.

You see, randomized trials are bad because sometimes we haven’t done them but we still really really want to make causal inferences so we’ll just pretend we can do that; and sometimes they disagree with each other and contradict what we epidemiologists have already proven, while the experts & IARC database never disagrees with themselves! Thank goodness we have official IARC doctrine to guide us in our confusion…

This must be one of the most brazen “it’s not a bug, it’s a feature!” moves I’ve ever seen. Mon chapeau, Gerard, Ludovic; mon chapeau.

Incidentally, Google Scholar says this paper has been cited at least 40 times; looking at some, it seem the citations are generally all positive. These are the sort of people deciding what’s a healthy diet and what substances are dangerous and what should be permitted or banned.

Enjoy your dinners.

Aerobic vs Weightlifting

Aerobic vs weightlifting exercise claims: multiple problems but primarily p-hacking, difference-in-statistical-significance-is-not-a-significant-difference, and controlling for intermediate variable.

…For example, weightlifting enhances brain function, reverses sarcopenia, and lowers the death rate in cancer survivors. Take this last item, lowering death rate in cancer survivors: garden-variety aerobic exercise had no effect on survival, while resistance training lowered death rates by one third… –http://roguehealthandfitness.com/case-for-weightlifting-as-anti-aging/

[paper in question: “The Effect of Resistance Exercise on All-Cause Mortality in Cancer Survivors”, et al 2014; https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4126241/ ]

This is a bad study, but sadly the problems are common to the field. Claiming that this study shows ‘weight lifting lowered death rates and aerobic exercise did not change survival’ is making at least 4 errors:

-

correlation!=causation; this is simply your usual correlation study (you know, of the sort which is always wrong in diet studies?), where you look at some health records and crank out some p-values. There should be no expectation that this will prove to be causally valid; in particular, reverse confounding is pretty obvious here and should remind people of the debate about weight and mortality. (Ah, but you say that the difference they found between aerobic and resistance shows that it’s not confounding because health bias should operate equally? Well, read on…)

-

power: with only 121 total deaths (~4% of the sample), this is inadequate to detect any differences but comically large correlates of health, as the estimate of predicting a third less mortality indicates

-

p-hacking/multiplicity, type S errors, exaggeration factor: take a look at that 95% confidence interval for resistance exercise (which is the only result they report in the abstract), which is an HR of 0.45-0.99. In other words, if the correlate were even the tiniest bit bigger, it would no longer have the magical ‘statistical-significance at p<0.05’. There’s at least 16 covariates, 2 stratifications, and 3 full models tested (that they report). By the statistical-significance filter, a HR of 0.67 will be a serious exaggeration (because only exaggerated estimates would - just barely - reach p=0.05 on this small dataset with only 121 deaths). We can rule out a HR of 0.67 as credible simply on a priori grounds: no exercise RCT has ever shown reductions in all-cause mortality remotely like that, and that’s the sort of reduction you just don’t see outside of miracle drugs for lethal diseases (for example, aspirin and vitamin D have RRs of >0.95).

-

“The Difference Between ‘Significant’ and ‘Not Significant’ is Not Itself Statistically Significant” (http://www.stat.columbia.edu/~gelman/research/published/signif4.pdf): the difference between aerobic exercise and resistance exercise is not statistically-significant in this study. The HR in model 1 for aerobic exercise is (0.63-1.32), and for aerobic exercise, (0.46-0.99). That is, the confidence intervals overlap. (Specifically, comparing the proportion of aerobic exercisers who died with the resistance exercisers who died, I get

prop.test(c(39,75), c(1251,1746))= p=0.12; to compute a survival curve I would need more data, I think.) The study itself does not anywhere seem to directly compare aerobic with resistance but always works in a stratified setting; I don’t know if they don’t realize this point about the null hypotheses they’re testing, or if they did do the logrank test and it came out non-statistically-significant and they quietly dropped it from the paper. -

the fallacy of controlling for intermediate variables: in the models they fit, they include as covariates “body mass index, current smoking (yes or no), heavy drinking (yes or no), hypertension (present or not), diabetes (present or not), hypercholesterolemia (yes or no), and parental history of cancer (yes or no).” This makes no sense. Both resistance exercise and aerobic exercise will themselves influence BMI, smoking status, hypertension, diabetes, and hypercholesterolemia. What does it mean to estimate the correlation of exercise with health which excludes all impact it has on your health through BMI, blood pressure, etc? You might as well say, ‘controlling for muscle percentage and body fat, we find weight lifting has no estimated benefits’, or ‘controlling for education, we find no benefits to IQ’ or ‘controlling for local infection rates, we find no mortality benefits to public vaccination’. This makes the results particularly nonsensical for the aerobic estimates if you want to interpret them as direct causal estimates - at most, the HR estimates here are an estimate of weird indirect effects (‘the remaining effect of exercise after removing all effects mediated by the covariates’). Unfortunately, structural equation models and Bayesian networks are a lot harder to use and justify than just dumping a list of covariates into your survival analysis package, so expect to see a lot more controlling for intermediate variables in the future.

The first three are sufficient to show you should not draw any strong conclusions, the latter two are nasty and could be problematic but can be avoided. These concerns are roughly ranked by importance: #1 puts a low ceiling on how much confidence in causality we could ever derive, a ceiling I informally put at ~33%; #2 is important because it shows that very little of the sampling error has been overcoming; #3 means we know the estimate is exaggerated; #4 is not important, because while that misinterpretation is tempting and the authors do nothing to stop the reader from making it, there’s still enough data in the paper that you can correct for it easily by doing your own proportion test; #5 could be an important criticism if anyone was relying heavily on the estimate contaminated by the covariates but in this case the raw proportions of deaths is what yields the headlines, so I bring this up to explain why we should ignore model 3’s estimate of aerobic exercise’s RR=1. This sort of problem is why one should put more weight on meta-analyses of RCTs - for example, “Progressive resistance strength training for improving physical function in older adults” https://onlinelibrary.wiley.com/enhanced/doi/10.1002/14651858.CD002759.pub2

So to summarize: this study collected the wrong kind of data for comparing mortality reduction from aerobics vs weightlifting, insufficient mortality data to result in strong evidence, exaggerates the result through p-hacking, did not actually compare aerobics and weightlifting head to head, and the analysis’s implicit assumptions would ignore much of any causal effects of aerobics/weightlifting!

Moxibustion Mouse Study

http://www.eurekalert.org/pub_releases/2013-12/nrr-pam120513.php … “Pre-moxibustion and moxibustion prevent Alzheimer’s disease” … http://www.sjzsyj.org/CN/article/downloadArticleFile.do?attachType=PDF&id=754

I don’t believe this for a second. But actually, this would be a nice followup to my previous email about the problems in animal research: this paper exhibits all the problems mentioned, and more. Let’s do a little critique here.

-

This paper is Chinese research performed in China by an all-Chinese team. The current state of Chinese research is bad. It’s really bad. Some reading on the topic:

-

https://web.archive.org/web/20131203150612/https://www.wired.co.uk/news/archive/2013-12/02/china-academic-scandal

-

https://newhumanist.org.uk/2365/lies-damn-lies-and-chinese-science

-

https://news.bbc.co.uk/2/hi/8448731.stm

-

https://gwern.net/doc/statistics/bias/2010-zhang.pdf

-

https://www.nytimes.com/2010/10/07/world/asia/07fraud.html

-

https://news.bbc.co.uk/1/hi/world/asia-pacific/4755861.stm

-

https://news.bbc.co.uk/2/hi/asia-pacific/8442147.stm

-

https://www.nature.com/articles/463142a

-

https://www.sciencenews.org/view/generic/id/330930/title/Traditional_Chinese_medicine_Big_questions

-

https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0020185

-

https://www.npr.org/2011/08/03/138937778/plagiarism-plague-hinders-chinas-scientific-ambition

I will note that there have been statistical anomalies in some of the Chinese papers on dual n-back training I have used in my meta-analysis, so I have some personal experience in the topic. #. ‘traditional medicine’ research is really bad no matter where you go. They mention acupuncture as justification? That’s just fantastic. https://en.wikipedia.org/wiki/Acupuncture punctures some of the hyperbolic claims, the PLOS link above deals with the poor-quality of the Chinese reviews & meta-analyses in general, and Cochrane is not too kind to acupuncture: https://www.thecochranelibrary.com/details/collection/691705/Acupuncture-ancient-tradition-meets-modern-science.html And in many of the reviews/meta-analyses there are stark geographic differences where the East Asian studies turn in tons of positive results while the Western studies somehow… don’t. #. The lead author is not an ordinary neuroscientist or doctor, but works at the “College of Acupuncture and Moxibustion”. Is he really going to publish a study concluding “moxibustion does not affect Alzheimer’s”⸮ Really⸮ #. Does this claim even make sense? Moxibustion, really⸮ For those not familiar, https://en.wikipedia.org/wiki/Moxibustion entails

> Suppliers usually age the mugwort and grind it up to a fluff;practitioners burn the fluff or process it further into a cigar-shaped stick. They can use it indirectly, with acupuncture needles, or burn it on the patient’s skin.

How on earth is this supposed to help AD? How does burning a planton your skin affect plaques in your brain? Or if they use acupuncture needles, how plausible is it that a few milligrams at most of mugwort inserted into the skin would do anything? While Wikipedia is not Cochrane or anything, it is troubling that this entry lists no useful application of moxibustion. And then it goes and links to “Does moxibustion work? An overview of systematic reviews” https://www.biomedcentral.com/1756-0500/3/284 which finds that

> Ten SRs met our inclusion criteria, which related to thefollowing conditions: cancer, ulcerative colitis, stroke rehabilitation, constipation, hypertension, pain conditions and breech presentation. Their conclusions were contradictory in several instances. Relatively clear evidence emerged to suggest that moxibustion is effective for breech presentation.

That review also mentions, incidentally, that

> Many of the primary moxibustion trials originate from China(data not shown); Vickers et al. demonstrated that virtually 100% of Chinese acupuncture trials are positive [ /doc/statistics/bias/1998-vickers.pdf], which seems to be equally applied to moxibustion, an acupuncture-like intervention. This casts considerable doubt on the reliability of these studies.

Alright, so let’s take stock here. Without ever looking beyond the title and authorship, we have found that this is a paper from a country with infamously bad research, in a field with infamously bad research quality, led by a researcher with considerable inherent conflict of interest, using a technique/substance which has already been linked with biased research, on a hypothesis that is grossly implausible. Based on all these base rates, we can say that there is basically zero chance this result will ever replicate, much less to other mice strains or even to humans.

It seems unfair to reject the paper out of hand, though, so let’s look at the actual paper a little.

Forty healthy rats were randomly divided into four groups: control group, model group, moxibustion group and pre-moxibustion group. The latter three groups were treated with intracerebral injection of Aβ1–42 to establish an AD-like pathology. The moxibustion group received suspended moxibustion on Baihui and Shenshu acupoints for 14 days after Aβ1–42 injection. The pre-moxibustion group was treated with moxibustion for eight courses (each course lasting for 6 days) prior to the exposure and 14 days after Aβ1–42 exposure. The final analysis incorporated all rats.

From the materials and methods:

Male Wistar rats (12 months old; 500 ± 20 g), of specific pathogen free grade, were obtained from the Experimental Animal Center of Huazhong University of Science and Technology (Wuhan, China), with license No. SCXK (E) 2008-0005.

After the hair around the acupoints was shaved, an ignited moxa-stick (diameter 6 mm; Nanyang Shennong Aaicao Appliance Company, Nanyang, Henan China; a round long stick made of moxa floss, also called moxa roll), was suspended perpendicularly 2 cm above the acupoints. Baihui (located in the middle of the parietal bone[50]) and Shenshu (located under the second lumbar on both sides [50]) acupoints were simultaneously given suspended moxibustion. Each treatment consisted of a 15-minute moxibustion, keeping the spot warm and red but not burnt. Generally, the skin temperature was kept at 43 ± 1° during the moxibustion procedure.

Right away we can spot 3 of the usual animal research methodological problems:

-

the sample size is too small - at n=10 rats in each group, you are not going to detect anything without large effect sizes. It is implausible that suspended moxa has any effects, and it is especially implausible that the effect sizes would be large.

-

there is no mention of blinding. The technicians or research assistants or whomever clearly know which mice they are dealing with.

-

there is mention of randomization, but it’s not specified how the randomization was done, which means it probably was done by the ‘stick your hand in and grab’ method, and probably does not balance by litter or other variables. This massively worsens the power problem, see “Design, power, and interpretation of studies in the standard murine model of ALS” http://www.researchals.org/uploaded_files/ALS%202008%209%204.pdf

(I’m a little curious about whether they really started with 10 mice in each group: the mice spent at least 60 days in the experiment and I wonder how many, out of 40, you would expect to die in that time period, especially after you’ve done your level best to give 3⁄4s of them Alzheimer’s disease during that time.)

I also note that the moxibustion situation is even worse than I thought: they did not use acupuncture needles to get some mugwort into the mice, they did not put any moxa/mugwort in physical contact, but instead the burning was 2cm away from the mice! The mechanism was bad, but it just got worse.

There’s no mention of the data being provided anywhere at all, either their website or the publisher; there’s some evidence that providing access to a paper’s data correlates with higher-quality research, so I mention this absence. It also makes it harder for me to do anything more complex like a post hoc power analysis.

Moving on, they list as dependent variables:

-

Morris water maze navigation test

-

Morris water maze spatial probe test

-

apoptosis rate of hippocampal neurons

Let’s look at the stats a bit.

-

Significance: The paper lists no less* than 14 p-values (4 < 0.05, the rest < 0.01), and for all of them uses an alpha of 0.05. The smallest given constraint is p<0.01. A Bonferroni correction on this would be 0.05/14 (since they must have done at least 14 tests to report 14 p-values), which means an alpha of 0.003571. But 0.01 > 0.05/14, so the 4 0.05 p-values disappear under multiple correction and probably most of the 0.01s would too.

-

this is a lower bound since the Morris diagrams report something like 20 p-values themselves, but I didn’t feel like carefully parsing the text to figure out exactly how many p-values are being reported

-

-

Effect sizes: no tables are provided, but figure 2 (the second Morris maze test) is illustrative. The control mice have no problem remember where the platform used to be, and so spend almost half a minute (~24s) in the right area searching for it. Makes sense, they don’t have AD. The AD mice have terrible memory, and so only spend ~6s in the right area and most of their time in the wrong place. Also makes sense. Now, what about the AD mice who had some moxa burnt 2cm away from their skin? They spend 14-16s or more than twice and almost 3 times as much as the non-moxa AD mice! And the claimed standard error on all 4 group of mice’s time is tiny, maybe 1s eyeballing the graph. So they are claiming, in this point, to have an effect size on memory of something like d = (15-6)/1 = 9. Insane! From burning some mugwort 2cm away from the mice’s skin‽

-

Power: actually, that result shows an example of what I mean by the result being absurd. Let’s calculate what that effect size implies for the power of their t-test comparing the model AD mice with the moxa AD mice. So let’s say the 2 moxa groups equate to n=20 15(1.5), and the AD controls were then n=10 5(0.5). The pooled standard deviation of the non-moxa and moxa mice is sqrt(((20-1)(1.5^2) + (10-1)(0.5^2)) / (20 + 10 - 2)) = 1.267, so the effect size was actually d=(15-5)/1.267 = 7.89. With 20 mice in 1 group and 10 mice in the other, an alpha of 0.05, then our power turns out to be…

library(pwr) pwr.t2n.test(n1 = 20, n2 = 10, d = 7.89, sig.level = 0.05) t test power calculation n1 = 20 n2 = 10 d = 7.89 sig.level = 0.05 power = 1

A power of 100%. Absurd. Have you ever seen animal research (or Alzheimer’s research…) with such high power? Real effects, real treatments, in large clinical trials or in meta-analyses, are hardly ever that high.

So. I don’t know how they got the results they got. Did they administer dozens of tests until they got the results they wanted? Did they simply make up the data like so many Chinese academics have? Or did they start with 30 mice in each group and cherrypick the best/worst 10? Did they abuse the model AD mice to make the AD+moxa mice look good?

In conclusion: this paper is complete bullshit, will not replicate.

“Someone Should Do Something”: Wishlist of Miscellaneous Project Ideas

Split out to list of ideas.

Estimating Censored Test Scores

An acquaintance asks the following question: he is applying for an university course which requires a certain minimum score on a test for admittance, and wonders about his chances and a possible trend of increasing minimum scores over time. (He hasn’t received his test results yet.) The university doesn’t provide a distribution of admittee scores, but it does provide the minimum scores for 2005-2013, unless all applicants were admitted because they all scored above an unknown cutoff—in which case it provides no minimum score. This leads to the dataset:

2005,NA

2006,410

2007,NA

2008,NA

2009,398

2010,407

2011,417

2012,NA

2013,NAA quick eyeball tells us that we can’t conclude much: only 4 actual datapoints, with 5 hidden from us. We can’t hope to conclude anything about time trends, other than there doesn’t seem to be much of one: the last score, 417, is not much higher than 410, and the last two scores are low enough to be hidden. We might be able to estimate a mean, though.

We can’t simply average the 4 scores and conclude the mean minimum is 410 because of those NAs: a number of scores have been ‘censored’ because they were too low, and while we don’t know what they were, we do know they were <398 (the smallest score) and so a bunch of <398s will pull down the uncensored mean of 410.

On approach is to treat it as a Tobit model and estimate using something like the censReg library (overview).

But if we try a quick call to censReg, we are confounded: a Tobit model expects you to provide the cutoff below which the observations were censored, but that is something we don’t know. All we know is that it must be below 398, we weren’t told it was exactly 395, 394, etc. Fortunately, this is a solved problem. For example: “The Tobit model with a non-zero threshold”, 2007 tells us:

In this paper, we consider estimating the unknown censoring threshold by the minimum of the uncensored yi’s. We show that the estimator γ’ of γ is superconsistent and asymptotically exponentially distributed. Carson (1988, 1989) also suggests estimating the unknown censoring threshold by the minimum of the uncensored yi’s. In a recent paper, Zuehlke (2003) rediscovers these unpublished results and demonstrates via simulations that the asymptotic distribution of the maximum likelihood estimator does not seem to be affected by the estimation of the censoring threshold.

That seems to be almost too simple and easy, but it makes sense and reminds me a little of the German tank problem: the minimum might not be that accurate a guess (it’s unlikely you just happened to draw a sample right on the censoring threshold) and it definitely can’t be wrong in the sense of being too low. (A Bayesian method might be able to do better with a prior like an exponential.)

With that settled, the analysis is straightforward: load the data, figure out the minimum score, set the NAs to 0, regress, and extract the model estimates for each year:

scores <- data.frame(Year=2005:2013,

MinimumScore=c(NA,410,NA,NA,398,407,417,NA,NA));

censorThreshold <- min(scores$MinimumScore, na.rm=T)

scores[is.na(scores)] <- 0

library(censReg)

## 'censorThreshold-1' because censReg seems to treat threshold as < and not <=

summary(censReg(MinimumScore ~ Year, left=censorThreshold-1, data=scores))

# Warning message:

# In censReg(MinimumScore ~ Year, left = censorThreshold - 1, data = scores) :

# at least one value of the endogenous variable is smaller than the left limit

#

# Call:

# censReg(formula = MinimumScore ~ Year, left = censorThreshold -

# 1, data = scores)

#

# Observations:

# Total Left-censored Uncensored Right-censored

# 9 5 4 0

#

# Coefficients:

# Estimate Std. error t value Pr(> t)

# (Intercept) -139.9711 Inf 0 1

# Year 0.2666 Inf 0 1

# logSigma 2.6020 Inf 0 1

#

# Newton-Raphson maximisation, 37 iterations

# Return code 1: gradient close to zero

# Log-likelihood: -19.35 on 3 Df

-139.9711 + (0.2666 * scores$Year)

# [1] 394.6 394.8 395.1 395.4 395.6 395.9 396.2 396.4 396.7With so little data the results aren’t very reliable, but there is one observation we can make.

The fact that half the dataset is censored tells us that the uncensored mean may be a huge overestimate (since we’re only looking at the ‘top half’ of the underlying data), and indeed it is. The original mean of the uncensored scores was 410; however, the estimate including the censored data is much lower, 397 (13 less)!

This demonstrates the danger of ignoring systematic biases in your data.

So, trying to calculate a mean or time effect is not helpful. What might be better is to instead exploit the censoring directly: if the censoring happened because everyone got in, then if you showed up in a censored year, you have 100% chance of getting in; while in a non-censored year you have an unknown but <100% chance of getting in; so the probability of a censored year sets a lower bound on one’s chances, and this is easy to calculate as a simple binomial problem—5 out of 9 years were censored years, so:

binom.test(c(5,4))

#

# Exact binomial test

#

# data: c(5, 4)

# number of successes = 5, number of trials = 9, p-value = 1

# alternative hypothesis: true probability of success is not equal to 0.5

# 95% confidence interval:

# 0.212 0.863

# sample estimates:

# probability of success

# 0.5556So we can tell him that he may have a >55% chance of getting in.

The Traveling Gerontologist Problem

A quick probability exercise: Wikipedia mentions Finland has 566 centenarians as of 2010.

That’s few enough you could imagine visiting them all to research them and their longevity, in a sort of traveling salesman problem but with gerontologists instead. Except, because of the exponential increase in mortality, centenarians have high annual mortality rates; it depends on the exact age but you could call it >30% (eg. Finnish 99yos in 2012 had a death toll of 326.54/1000). So you might well try to visit a centenarian and discover they’d died before you got there.

How bad a risk is this? Well, if the risk per year is 30%, then one has a 70% chance of surviving a year. To survive a year, you must survive all 365 days; by the multiplication rule, the risk is x where or 0.7 = x365.25; solving, x = 0.999024.

It takes time to visit a centenarian—it wouldn’t do to be abrupt and see them for only a few minutes, you ought to listen to their stories, and you need to get to a hotel or airport, so let’s assume you visit 1 centenarian per day.

If you visit centenarian A on day 1, and you want to visit centenarian B on day 2, then you can count on a 99.9% chance B is still alive. So far so good. And if you wanted to visit 566 centenarians (let’s imagine you have a regularly-updated master list of centenarians from the Finnish population registry), then you only have to beat the odds 566 times in a row, which is not that hard: 0.999024566 = 0.5754023437943274.

But that’s coldblooded of you to objectify those Finnish centenarians! “Any centenarian will do, I don’t care.” What if you picked the current set of 566 centenarians and wanted to visit just them, specifically—with no new centenarians introduced to the list to replace any dead ones.

That’s a little more complicated. When you visit the first centenarian, it’s the same probability: 0.999024. When you visit the second centenarian the odds change since now she (and it’s more often ‘she’ than ‘he’, since remember the exponential and males having shorter mean lifetimes) has to survive 2 days, so it’s or 0.9990242; for the third, it’s 0.9990243, and so on to #566 who has been patiently waiting and trying to survive a risk of 0.999024566, and then you need to multiply to get your odds of beating every single risk of death and the centenarian not leaving for a more permanent rendezvous: , which would be , or in Haskell:

product (map (\x -> 0.999024**x) [1..566])

→ 8.952743340164081e-69(A little surprisingly, Wolfram Alpha can solve the TeX expression too.)

Given the use of floating point in that function (567 floating point exponentiations followed by as many multiplications) and the horror stories about floating point, one might worry the answer is wrong & the real probability is much larger. We can retry with an implementation of computable reals, CReal, which can be very slow but should give more precise answers:

:module + Data.Number.CReal

showCReal 100 (product (map (\x -> 0.999024**x) [1..566]))

→ 0.0000000000000000000000000000000000000000000000000000000000000000000089527433401308585720915431195262Looks good—agrees with the floating point version up to the 11th digit:

8.9527433401 64081e-69

8.9527433401 308585720915431195262We can also check by rewriting the product equation to avoid all the exponentiation and multiplication (which might cause issues) in favor of a single exponential:

-

(as before)

-

= (since )

-

= (by arithmetic progression/Gauss’s famous classroom trick since )

-

= (start substituting in specific values)

-

=

-

= 0.999024160461

So:

0.999024^160461

→ 8.95274334014924e-69Or to go back to the longer version:

0.999024**((566*(1 + 566)) / 2)

→ 8.952743340164096e-69Also close. All probabilities of success are minute.

How fast would you have to be if you wanted to at least try to accomplish the tour with, say, a 50-50 chance?

Well, that’s easy: you can consider the probability of all of them surviving one day and as we saw earlier, that’s 0.999024566 = 0.58, and two days would be So you can only take a little over a day before you’ve probabilistically lost & one of them has died; if you hit all 566 centenarians in 24 hours, that’s ~24 centenarians per hour or ~2 minutes to chat with each one and travel to the next. If you’re trying to collect DNA samples, better hope they’re all awake and able to give consent!

So safe to say, you will probably not be able to manage the Traveling Gerontologist’s tour.

Bayes Nets

Daily Weight Data Graph

As the datasets I’m interested in grow in number of variables, it becomes harder to justify doing analysis by simply writing down a simple linear model with a single dependent variable and throwing in the independent variables and maybe a few transformations chosen by hand. I can instead write down some simultaneous-equations/structural-equation-models, but while it’s usually obvious what to do for k < 4 and if it’s not I can compare the possible variants, 4 variables is questionable what the right SEM is, and >5, it’s hopeless. Factor analysis to extract some latent variables is a possibility, but the more general solution here seems to be probabilistic graphical models such as Bayesian networks.

I thought I’d try out some Bayes net inference on some of my datasets. In this case, I have ~150 daily measurements from my Omron body composition scale, measuring total weight, body fat percentage, and some other things (see an Omron manual):

-

Total weight

-

BMI

-

Body fat percentage

-

Muscle percentage

-

Resting metabolism in calories

-

“Body age”

-

Visceral fat index

The 7 variables are interrelated, so this is definitely a case where a simple lm is not going to do the trick. It’s also not 100% clear how to set up a SEM; some definitions are obvious (the much-criticized BMI is going to be determined solely by total weight, muscle and fat percentage might be inversely related) but others are not (how does “visceral fat” relate to body fat?). And it’s not a hopelessly small amount of data.

The Bayes net R library I’m trying out is bnlearn (paper).

library(bnlearn)

# https://www.dropbox.com/s/4nsrszm85m47272/2015-03-22-gwern-weight.csv

weight <- read.csv("selfexperiment/weight.csv")

weight$Date <- NULL; weight$Weight.scale <- NULL

# remove missing data

weightC <- na.omit(weight)

# bnlearn can't handle integers, oddly enough

weightC <- as.data.frame(sapply(weightC, as.numeric))

summary(weightC)

# Weight.Omron Weight.BMI Weight.body.fat Weight.muscle

# Min. :193.0000 Min. : 26.90000 Min. :27.00000 Min. :32.60000

# 1st Qu.:195.2000 1st Qu.: 27.20000 1st Qu.:28.40000 1st Qu.:34.20000

# Median :196.4000 Median : 27.40000 Median :28.70000 Median :34.50000

# Mean :196.4931 Mean : 28.95409 Mean :28.70314 Mean :34.47296

# 3rd Qu.:197.8000 3rd Qu.: 27.60000 3rd Qu.:29.10000 3rd Qu.:34.70000

# Max. :200.6000 Max. : 28.00000 Max. :31.70000 Max. :35.50000

# Weight.resting.metabolism Weight.body.age Weight.visceral.fat

# Min. :1857.000 Min. :52.00000 Min. : 9.000000

# 1st Qu.:1877.000 1st Qu.:53.00000 1st Qu.:10.000000

# Median :1885.000 Median :53.00000 Median :10.000000

# Mean :1885.138 Mean :53.32704 Mean : 9.949686

# 3rd Qu.:1893.000 3rd Qu.:54.00000 3rd Qu.:10.000000

# Max. :1914.000 Max. :56.00000 Max. :11.000000

cor(weightC)

# Weight.Omron Weight.BMI Weight.body.fat Weight.muscle

# Weight.Omron 1.00000000000 0.98858376919 0.1610643221 -0.06976934825

# Weight.BMI 0.98858376919 1.00000000000 0.1521872557 -0.06231142104

# Weight.body.fat 0.16106432213 0.15218725566 1.0000000000 -0.98704369855

# Weight.muscle -0.06976934825 -0.06231142104 -0.9870436985 1.00000000000

# Weight.resting.metabolism 0.96693236051 0.95959140245 -0.0665001241 0.15621294274

# Weight.body.age 0.82581939626 0.81286141659 0.5500409365 -0.47408608681

# Weight.visceral.fat 0.41542744168 0.43260100665 0.2798756916 -0.25076619829

# Weight.resting.metabolism Weight.body.age Weight.visceral.fat

# Weight.Omron 0.9669323605 0.8258193963 0.4154274417

# Weight.BMI 0.9595914024 0.8128614166 0.4326010067

# Weight.body.fat -0.0665001241 0.5500409365 0.2798756916

# Weight.muscle 0.1562129427 -0.4740860868 -0.2507661983

# Weight.resting.metabolism 1.0000000000 0.7008354776 0.3557229425

# Weight.body.age 0.7008354776 1.0000000000 0.4840752389

# Weight.visceral.fat 0.3557229425 0.4840752389 1.0000000000

## create alternate dataset expressing the two percentage variables as pounds, since this might fit better

weightC2 <- weightC

weightC2$Weight.body.fat <- weightC2$Weight.Omron * (weightC2$Weight.body.fat / 100)

weightC2$Weight.muscle <- weightC2$Weight.Omron * (weightC2$Weight.muscle / 100)Begin analysis:

pdap <- hc(weightC)

pdapc2 <- hc(weightC2)

## bigger is better:

score(pdap, weightC)

# [1] -224.2563072

score(pdapc2, weightC2)

# [1] -439.7811072

## stick with the original, then

pdap

# Bayesian network learned via Score-based methods

#

# model:

# [Weight.Omron][Weight.body.fat][Weight.BMI|Weight.Omron]

# [Weight.resting.metabolism|Weight.Omron:Weight.body.fat]

# [Weight.body.age|Weight.Omron:Weight.body.fat]

# [Weight.muscle|Weight.body.fat:Weight.resting.metabolism][Weight.visceral.fat|Weight.body.age]

# nodes: 7

# arcs: 8

# undirected arcs: 0

# directed arcs: 8

# average markov blanket size: 2.57

# average neighbourhood size: 2.29

# average branching factor: 1.14

#

# learning algorithm: Hill-Climbing

# score: BIC (Gauss.)

# penalization coefficient: 2.534452101

# tests used in the learning procedure: 69

# optimized: TRUE

plot(pdap)

## https://i.imgur.com/nipmqta.pngThis inferred graph is obviously wrong in several respects, violating prior knowledge about some of the relationships.

More specifically, my prior knowledge:

-

Weight.Omron== total weight; should be influenced byWeight.body.fat(%),Weight.muscle(%), &Weight.visceral.fat -

Weight.visceral.fat: ordinal variable, <=9 = normal; 10-14 = high; 15+ = very high; from the Omron manual:Visceral fat area (0—approx. 300 cm , 1 inch=2.54 cm) distribution with 30 levels. NOTE: Visceral fat levels are relative and not absolute values.

-

Weight.BMI: BMI is a simple function of total weight & height (specificallyBMI = round(weight / height^2)), so it should be influenced only byWeight.Omron, and influence nothing else -

Weight.body.age: should be influenced byWeight.Omron,Weight.body.fat, andWeight.muscle, based on the description in the manual:Body age is based on your resting metabolism. Body age is calculated by using your weight, body fat percentage and skeletal muscle percentage to produce a guide to whether your body age is above or below the average for your actual age.

-

Weight.resting.metabolism: a function of the others, but I’m not sure which exactly; manual talks about what resting metabolism is generically and specifies it has the range “385 to 3999 kcal with 1 kcal increments”; https://en.wikipedia.org/wiki/Basal_metabolic_rate suggests the Omron may be using one of several approximation equations based on age/sex/height/weight, but it might also be using lean body mass as well.

Unfortunately, bnlearn doesn’t seem to support any easy way of encoding the prior knowledge—for example, you can’t say ‘no outgoing arrows from node X’—so I iterate, adding bad arrows to the blacklist.

Which arrows violate prior knowledge?

-

[Weight.visceral.fat|Weight.body.age](read backwards, asWeight.body.age → Weight.visceral.fat) -

[Weight.muscle|Weight.resting.metabolism]

Retry, blacklisting those 2 arrows:

pdap2 <- hc(weightC, blacklist=data.frame(from=c("Weight.body.age", "Weight.resting.metabolism"), to=c("Weight.visceral.fat","Weight.muscle")))New violations:

-

[Weight.visceral.fat|Weight.BMI] -

[Weight.muscle|Weight.Omron]

pdap3 <- hc(weightC, blacklist=data.frame(from=c("Weight.body.age", "Weight.resting.metabolism", "Weight.BMI", "Weight.Omron"), to=c("Weight.visceral.fat","Weight.muscle", "Weight.visceral.fat", "Weight.muscle")))New violations:

-

[Weight.visceral.fat|Weight.Omron] -

[Weight.muscle|Weight.BMI]

pdap4 <- hc(weightC, blacklist=data.frame(from=c("Weight.body.age", "Weight.resting.metabolism", "Weight.BMI", "Weight.Omron", "Weight.Omron", "Weight.BMI"), to=c("Weight.visceral.fat","Weight.muscle", "Weight.visceral.fat", "Weight.muscle", "Weight.visceral.fat", "Weight.muscle")))One violation:

-

[Weight.muscle|Weight.body.age]

pdap5 <- hc(weightC, blacklist=data.frame(from=c("Weight.body.age", "Weight.resting.metabolism", "Weight.BMI", "Weight.Omron", "Weight.Omron", "Weight.BMI", "Weight.body.age"), to=c("Weight.visceral.fat","Weight.muscle", "Weight.visceral.fat", "Weight.muscle", "Weight.visceral.fat", "Weight.muscle", "Weight.muscle")))

# Bayesian network learned via Score-based methods

#

# model:

# [Weight.body.fat][Weight.muscle|Weight.body.fat][Weight.visceral.fat|Weight.body.fat]

# [Weight.Omron|Weight.visceral.fat][Weight.BMI|Weight.Omron]

# [Weight.resting.metabolism|Weight.Omron:Weight.body.fat]

# [Weight.body.age|Weight.Omron:Weight.body.fat]

# nodes: 7

# arcs: 8

# undirected arcs: 0

# directed arcs: 8

# average markov blanket size: 2.57

# average neighbourhood size: 2.29

# average branching factor: 1.14

#

# learning algorithm: Hill-Climbing

# score: BIC (Gauss.)

# penalization coefficient: 2.534452101

# tests used in the learning procedure: 62

# optimized: TRUE

plot(pdap5)

## https://i.imgur.com/nxCfmYf.png

## implementing all the prior knowledge cost ~30:

score(pdap5, weightC)

# [1] -254.6061724No violations, so let’s use the network and estimate the specific parameters:

fit <- bn.fit(pdap5, weightC); fit

# Bayesian network parameters

#

# Parameters of node Weight.Omron (Gaussian distribution)

#

# Conditional density: Weight.Omron | Weight.visceral.fat

# Coefficients:

# (Intercept) Weight.visceral.fat

# 169.181651376 2.744954128

# Standard deviation of the residuals: 1.486044472

#

# Parameters of node Weight.BMI (Gaussian distribution)

#

# Conditional density: Weight.BMI | Weight.Omron

# Coefficients:

# (Intercept) Weight.Omron

# -0.3115772322 0.1411044216

# Standard deviation of the residuals: 0.03513413381

#

# Parameters of node Weight.body.fat (Gaussian distribution)

#

# Conditional density: Weight.body.fat

# Coefficients:

# (Intercept)

# 28.70314465

# Standard deviation of the residuals: 0.644590085

#

# Parameters of node Weight.muscle (Gaussian distribution)

#

# Conditional density: Weight.muscle | Weight.body.fat

# Coefficients:

# (Intercept) Weight.body.fat

# 52.1003347352 -0.6141270921

# Standard deviation of the residuals: 0.06455478599

#

# Parameters of node Weight.resting.metabolism (Gaussian distribution)

#

# Conditional density: Weight.resting.metabolism | Weight.Omron + Weight.body.fat

# Coefficients:

# (Intercept) Weight.Omron Weight.body.fat

# 666.910582196 6.767607964 -3.886694779

# Standard deviation of the residuals: 1.323176507

#

# Parameters of node Weight.body.age (Gaussian distribution)

#

# Conditional density: Weight.body.age | Weight.Omron + Weight.body.fat

# Coefficients:

# (Intercept) Weight.Omron Weight.body.fat

# -32.2651379176 0.3603672788 0.5150134225

# Standard deviation of the residuals: 0.2914301529

#

# Parameters of node Weight.visceral.fat (Gaussian distribution)

#

# Conditional density: Weight.visceral.fat | Weight.body.fat

# Coefficients:

# (Intercept) Weight.body.fat

# 6.8781100009 0.1070118125

# Standard deviation of the residuals: 0.2373649058

## residuals look fairly good, except for Weight.resting.metabolism, where there are some extreme residuals in what looks a bit like a sigmoid sort of pattern, suggesting nonlinearities in the Omron scale's formula?

bn.fit.qqplot(fit)

## https://i.imgur.com/mSallOv.pngWe can double-check the estimates here by turning the Bayes net model into a SEM and seeing how the estimates compare, and also seeing if the p-values suggest we’ve found a good model:

library(lavaan)

Weight.model1 <- '

Weight.visceral.fat ~ Weight.body.fat

Weight.Omron ~ Weight.visceral.fat

Weight.BMI ~ Weight.Omron

Weight.body.age ~ Weight.Omron + Weight.body.fat

Weight.muscle ~ Weight.body.fat

Weight.resting.metabolism ~ Weight.Omron + Weight.body.fat

'

Weight.fit1 <- sem(model = Weight.model1, data = weightC)

summary(Weight.fit1)

# lavaan (0.5-16) converged normally after 139 iterations

#

# Number of observations 159

#

# Estimator ML

# Minimum Function Test Statistic 71.342

# Degrees of freedom 7

# P-value (Chi-square) 0.000

#

# Parameter estimates:

#

# Information Expected

# Standard Errors Standard

#

# Estimate Std.err Z-value P(>|z|)

# Regressions:

# Weight.visceral.fat ~

# Weight.bdy.ft 0.107 0.029 3.676 0.000

# Weight.Omron ~

# Wght.vscrl.ft 2.745 0.477 5.759 0.000

# Weight.BMI ~

# Weight.Omron 0.141 0.002 82.862 0.000

# Weight.body.age ~

# Weight.Omron 0.357 0.014 25.162 0.000

# Weight.bdy.ft 0.516 0.036 14.387 0.000

# Weight.muscle ~

# Weight.bdy.ft -0.614 0.008 -77.591 0.000

# Weight.resting.metabolism ~

# Weight.Omron 6.730 0.064 104.631 0.000

# Weight.bdy.ft -3.860 0.162 -23.837 0.000

#

# Covariances:

# Weight.BMI ~~

# Weight.body.g -0.000 0.001 -0.116 0.907

# Weight.muscle -0.000 0.000 -0.216 0.829

# Wght.rstng.mt 0.005 0.004 1.453 0.146

# Weight.body.age ~~

# Weight.muscle 0.001 0.001 0.403 0.687

# Wght.rstng.mt -0.021 0.030 -0.700 0.484

# Weight.muscle ~~

# Wght.rstng.mt 0.007 0.007 1.003 0.316

#

# Variances:

# Wght.vscrl.ft 0.056 0.006

# Weight.Omron 2.181 0.245

# Weight.BMI 0.001 0.000

# Weight.body.g 0.083 0.009

# Weight.muscle 0.004 0.000

# Wght.rstng.mt 1.721 0.193Comparing the coefficients by eye, they tend to be quite close (usually within 0.1) and the p-values are all statistically-significant.

The network itself looks right, although some of the edges are surprises: I didn’t know visceral fat was predictable from body fat (I thought they were measuring separate things), and the relative independence of muscle suggests that in any exercise plan I might be better off focusing on the body fat percentage rather than the muscle percentage since the former may be effectively determining the latter.

So what did I learn here?

-

learning network structure and direction of arrows is hard; even with only 7 variables and n = 159 (accurate clean data), the hill-climbing algorithm will learn at least 7 wrong arcs.

-

and the derived graphs depend disturbingly heavily on choice of algorithm; I used the

hchill-climbing algorithm (since I’m lazy and didn’t want to specify arrow directions), but when I try out the alternatives likeiambon the same data & blacklist, the found graph looks rather different

-

-

Gaussians are, as always, sensitive to outliers: I was surprised the first graph didn’t show BMI connected to anything, so I took a closer look and found I had miscoded a BMI of 28 as 280!

-

bnlearn, while not as hard to use as I expected, could still use usability improvements: I should not need to coerce integer data into exactly equivalent numeric types just becausebnlearndoesn’t recognize integers; and blacklisting/whitelisting needs to be more powerful—iteratively generating graphs and manually inspecting and manually blacklisting is tedious and does not scale-

hence, it may make more sense to find a graph using

bnlearnand then convert it into simultaneous-equations and manipulate it using more mature SEM libraries

-

Zeo Sleep Data

Here I look at my Zeo sleep data; more variables, more complex relations, and more unknown ones, but on the positive side, ~12x more data to work with.

zeo <- read.csv("~/wiki/doc/zeo/gwern-zeodata.csv")

zeo$Sleep.Date <- as.Date(zeo$Sleep.Date, format="%m/%d/%Y")

## convert "05/12/2014 06:45" to "06:45"

zeo$Start.of.Night <- sapply(strsplit(as.character(zeo$Start.of.Night), " "), function(x) { x[2] })

## convert "06:45" to 24300

interval <- function(x) { if (!is.na(x)) { if (grepl(" s",x)) as.integer(sub(" s","",x))

else { y <- unlist(strsplit(x, ":")); as.integer(y[[1]])*60 + as.integer(y[[2]]); }

}

else NA

}

zeo$Start.of.Night <- sapply(zeo$Start.of.Night, interval)

## correct for the switch to new unencrypted firmware in March 2013;

## I don't know why the new firmware subtracts 15 hours

zeo[(zeo$Sleep.Date >= as.Date("2013-03-11")),]$Start.of.Night <- (zeo[(zeo$Sleep.Date >= as.Date("2013-03-11")),]$Start.of.Night + 900) %% (24*60)

## after midnight (24*60=1440), Start.of.Night wraps around to 0, which obscures any trends,

## so we'll map anything before 7AM to time+1440

zeo[zeo$Start.of.Night<420 & !is.na(zeo$Start.of.Night),]$Start.of.Night <- (zeo[zeo$Start.of.Night<420 & !is.na(zeo$Start.of.Night),]$Start.of.Night + (24*60))

zeoSmall <- subset(zeo, select=c(ZQ,Total.Z,Time.to.Z,Time.in.Wake,Time.in.REM,Time.in.Light,Time.in.Deep,Awakenings,Start.of.Night,Morning.Feel))

zeoClean <- na.omit(zeoSmall)

# bnlearn doesn't like the 'integer' class that most of the data-frame is in

zeoClean <- as.data.frame(sapply(zeoClean, as.numeric))Prior knowledge:

-

Start.of.Nightis temporally first, and cannot be caused -

Time.to.Zis temporally second, and can be influenced byStart.of.Night(likely a connection between how late I go to bed and how fast I fall asleep) &Time.in.Wake(since if it takes 10 minutes to fall asleep, I must spend ≥10 minutes in wake) but not others -

Morning.Feelis temporally last, and cannot cause anything -

ZQis a synthetic variable invented by Zeo according to an opaque formula, which cannot cause anything but is determined by others -

Total.Zshould be the sum ofTime.in.Light,Time.in.REM, andTime.in.Deep -

Awakeningsshould have an arrow withTime.in.Wakebut it’s not clear which way it should run

library(bnlearn)

## after a bunch of iteration, blacklisting arrows which violate the prior knowledge

bl <- data.frame(from=c("Morning.Feel", "ZQ", "ZQ", "ZQ", "ZQ", "ZQ", "ZQ", "Time.in.REM", "Time.in.Light", "Time.in.Deep", "Morning.Feel", "Awakenings", "Time.in.Light", "Morning.Feel", "Morning.Feel","Total.Z", "Time.in.Wake", "Time.to.Z", "Total.Z", "Total.Z", "Total.Z"),

to=c("Start.of.Night", "Total.Z", "Time.in.Wake", "Time.in.REM", "Time.in.Deep", "Morning.Feel","Start.of.Night", "Start.of.Night","Start.of.Night","Start.of.Night", "Time.to.Z", "Time.to.Z", "Time.to.Z", "Total.Z", "Time.in.Wake","Time.to.Z","Time.to.Z", "Start.of.Night", "Time.in.Deep", "Time.in.REM", "Time.in.Light"))

zeo.hc <- hc(zeoClean, blacklist=bl)

zeo.iamb <- iamb(zeoClean, blacklist=bl)

## problem: undirected arc: Time.in.Deep/Time.in.REM; since hc inferred [Time.in.Deep|Time.in.REM], I'll copy that for iamb:

zeo.iamb <- set.arc(zeo.iamb, from = "Time.in.REM", to = "Time.in.Deep")

zeo.gs <- gs(zeoClean, blacklist=bl)

## same undirected arc:

zeo.gs <- set.arc(zeo.gs, from = "Time.in.REM", to = "Time.in.Deep")

## Bigger is better:

score(zeo.iamb, data=zeoClean)

# [1] -44776.79185

score(zeo.gs, data=zeoClean)

# [1] -44776.79185

score(zeo.hc, data=zeoClean)

# [1] -44557.6952

## hc scores best, so let's look at it:

zeo.hc

# Bayesian network learned via Score-based methods

#

# model:

# [Start.of.Night][Time.to.Z|Start.of.Night][Time.in.Light|Time.to.Z:Start.of.Night]

# [Time.in.REM|Time.in.Light:Start.of.Night][Time.in.Deep|Time.in.REM:Time.in.Light:Start.of.Night]

# [Total.Z|Time.in.REM:Time.in.Light:Time.in.Deep][Time.in.Wake|Total.Z:Time.to.Z]

# [Awakenings|Time.to.Z:Time.in.Wake:Time.in.REM:Time.in.Light:Start.of.Night]

# [Morning.Feel|Total.Z:Time.to.Z:Time.in.Wake:Time.in.Light:Start.of.Night]

# [ZQ|Total.Z:Time.in.Wake:Time.in.REM:Time.in.Deep:Awakenings]

# nodes: 10

# arcs: 28

# undirected arcs: 0

# directed arcs: 28

# average markov blanket size: 7.40

# average neighbourhood size: 5.60

# average branching factor: 2.80

#

# learning algorithm: Hill-Climbing

# score: BIC (Gauss.)

# penalization coefficient: 3.614556939

# tests used in the learning procedure: 281

# optimized: TRUE

plot(zeo.hc)

## https://i.imgur.com/nD3LXND.png

fit <- bn.fit(zeo.hc, zeoClean); fit

#

# Bayesian network parameters

#

# Parameters of node ZQ (Gaussian distribution)

#