Anime Neural Net Graveyard

Post-mortems of failed neural network experiments in generating anime images, pre-StyleGAN/BigGAN.

My experiments in generating anime faces, tried periodically since 201511ya, succeeded in 2019 with the release of StyleGAN. But for comparison, here are the failures from some of my older GAN or other NN attempts; as the quality is worse than StyleGAN, I won’t bother going into details in these post-mortems—creating the datasets & training the ProGAN & tuning & transfer-learning were all much the same as already outlined at length for the StyleGAN results.

Included are:

ProGAN

Glow

MSG-GAN

PokeGAN

Self-Attention-GAN-TensorFlow

VGAN

BigGAN unofficial

BigGAN-TensorFlow

BigGAN-PyTorch

(official BigGAN)

GAN-QP

WGAN

IntroVAE

Examples of anime faces generated by neural networks have wowed weebs across the world. Who could not be impressed by TWDNE samples like these:

64 TWDNE face samples selected from social media, in an 8×8 grid.

They are colorful, well-drawn, near-flawless, and attractive to look at. This page is not about them. This page is about… the others—the failed experiments that came before.

GAN

ProGAN

Using et al 2017’s official implementation:

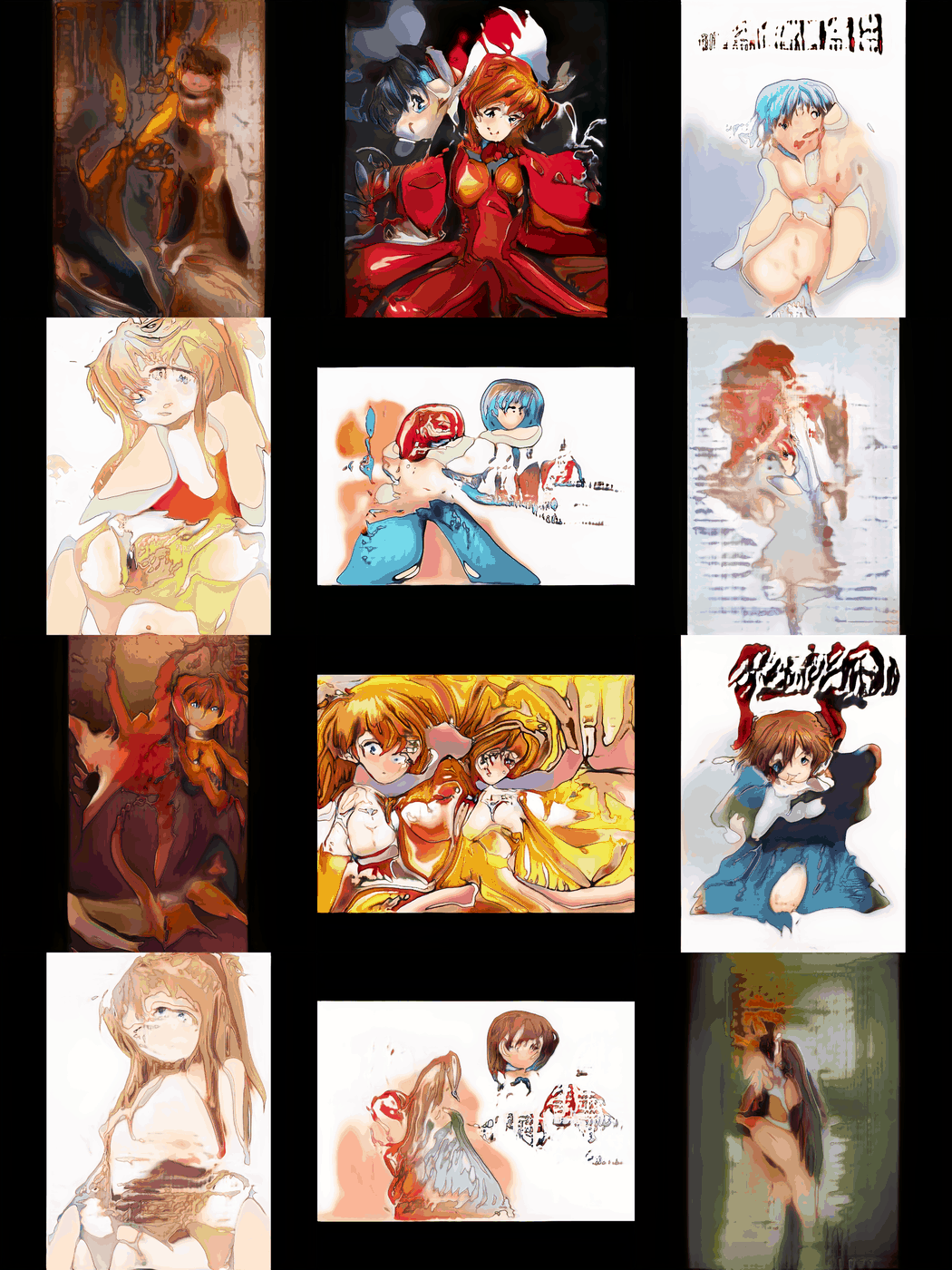

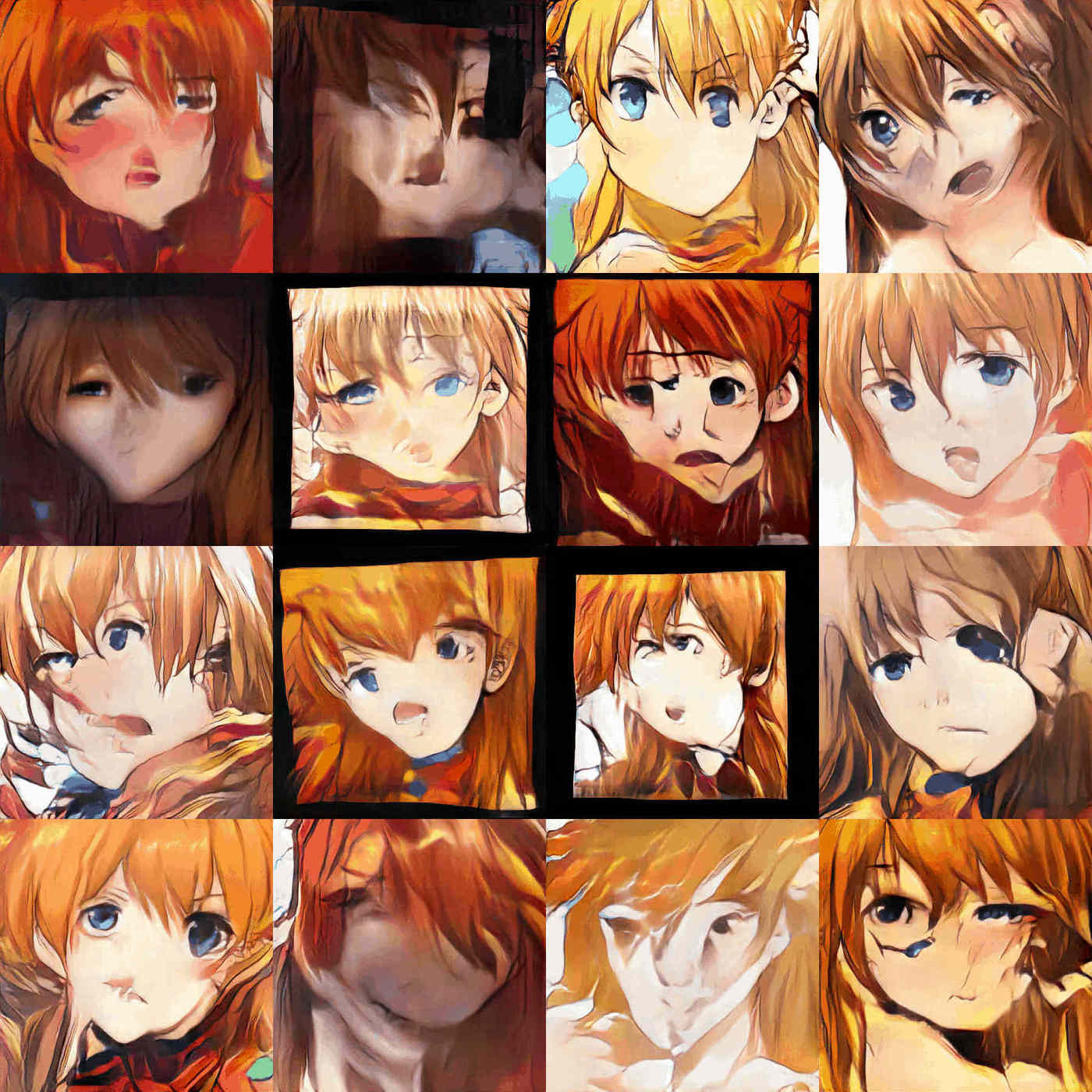

2018-09-08, 512–1024px whole-Asuka images ProGAN samples:

1024px, whole-Asuka images, ProGAN

512px whole-Asuka images, ProGAN

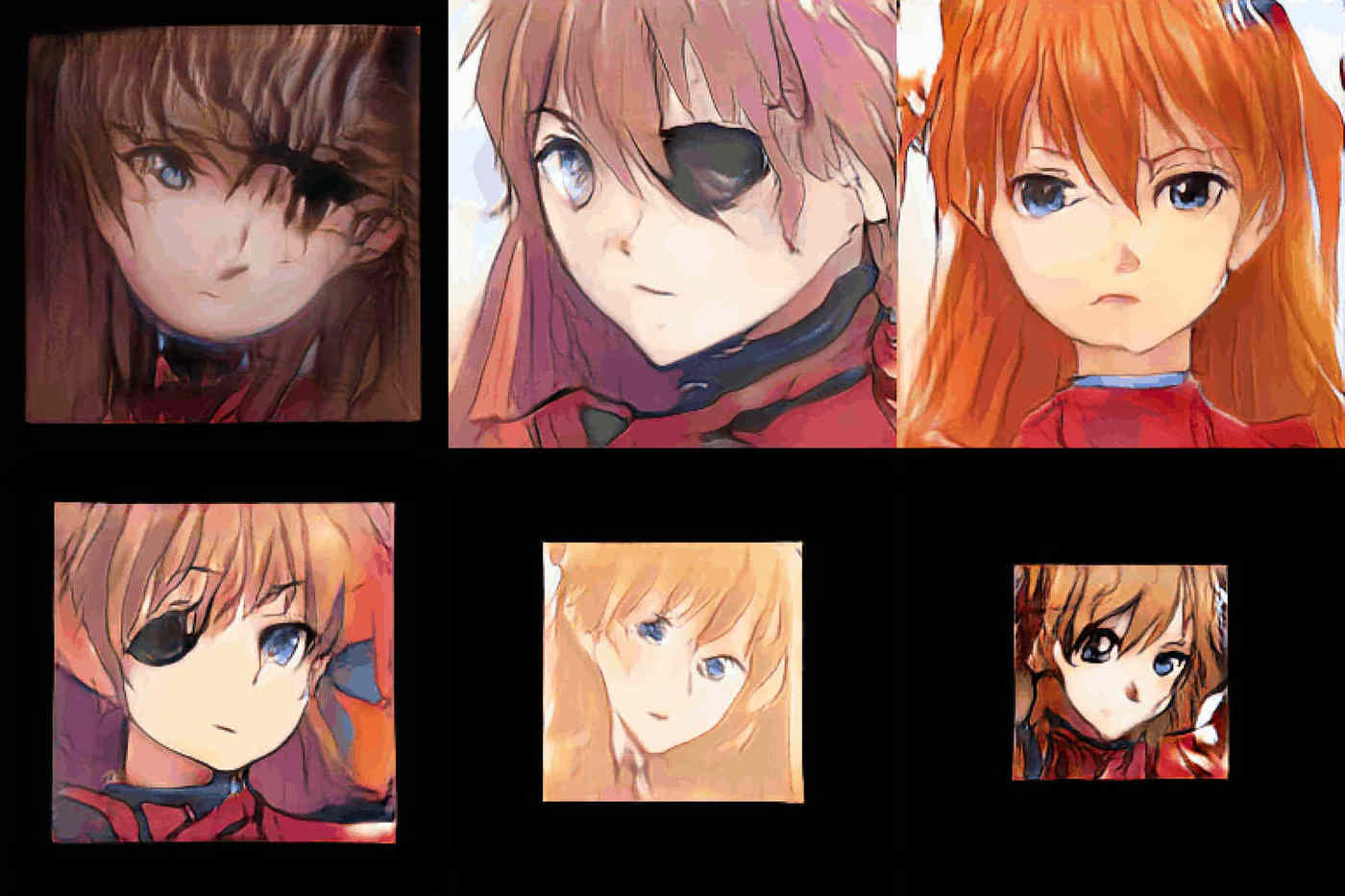

2018-09-18, 512px Asuka faces, ProGAN samples:

512px Asuka faces, ProGAN

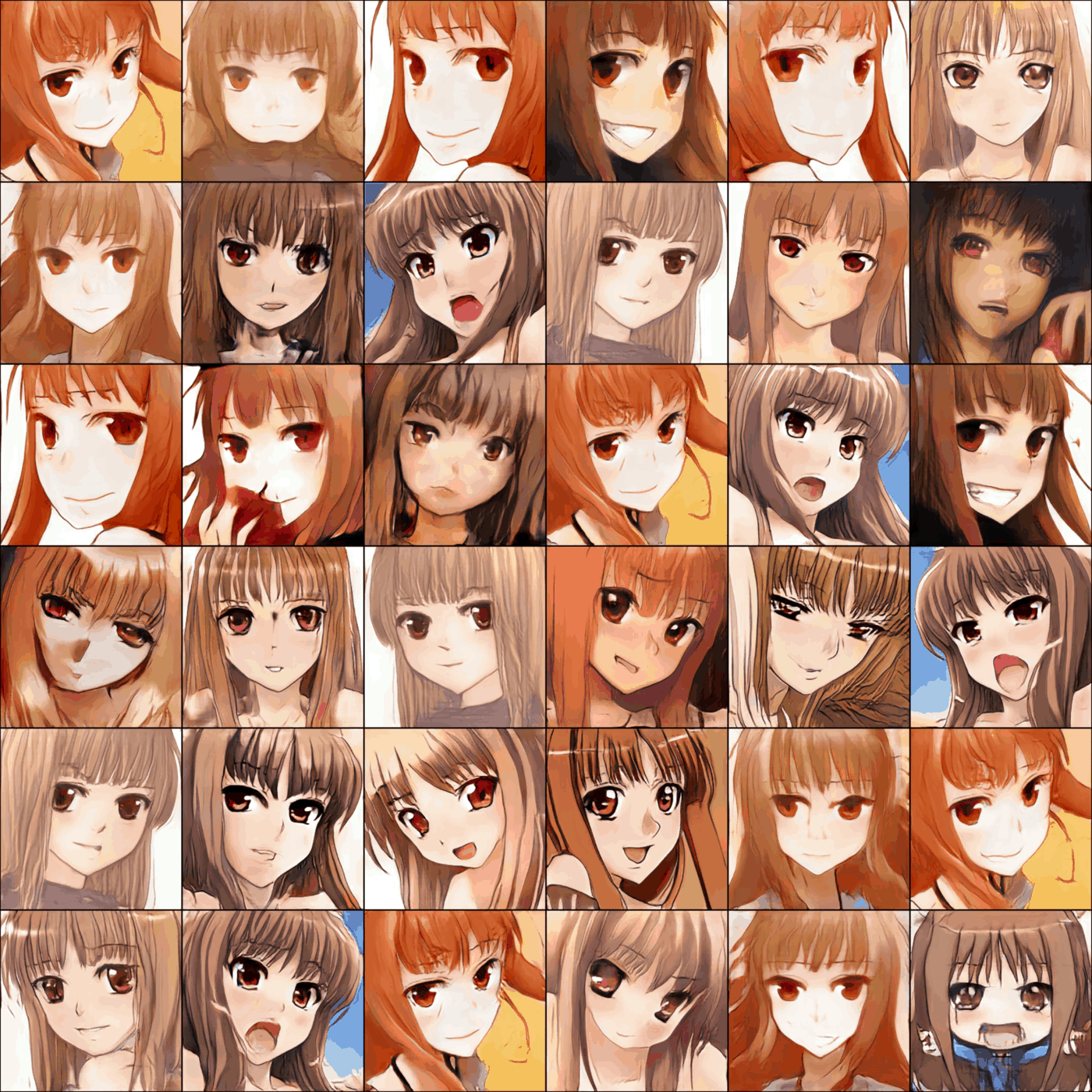

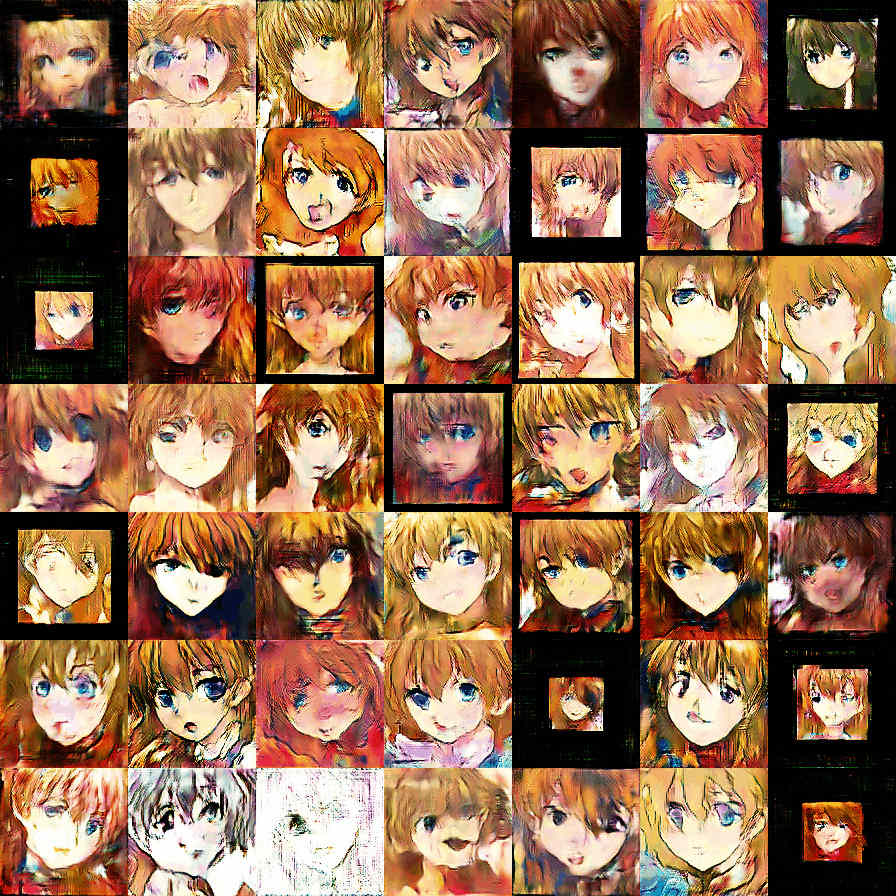

2018-10-29, 512px Holo faces, ProGAN:

Random samples of 512px ProGAN Holo faces

After generating ~1k Holo faces, I selected the top decile (n = 103) of the faces (Imgur mirror):

512px ProGAN Holo faces, random samples from top decile (6×6)

The top decile images are, nevertheless, showing distinct signs of both artifacting & overfitting/memorization of data points. Another 2 weeks proved this out further:

ProGAN samples of 512px Holo faces, after badly overfitting (iteration #10,325)

Interpolation video of the October 2018 512px Holo face ProGAN; note the gross overfitting indicated by the abruptness of the interpolations jumping from face (mode) to face (mode) and lack of meaningful intermediate faces in addition to the overall blurriness & low visual quality. 2019-01-17, Danbooru2017 512px SFW images, ProGAN:

512px SFW Danbooru2017, ProGAN

2019-02-05 (stopped in order to train with the new StyleGAN codebase), the 512px anime face dataset used elsewhere, ProGAN:

512px anime faces, ProGAN

Interpolation video of the 2018-02-05 512px anime face ProGAN; while the image quality is low, the diversity is good & shows no overfitting/memorization or blatant mode collapse Downloads:

MSG-GAN

MSG-GAN official implementation:

2018-12-15, 512px Asuka faces, failure case

PokeGAN

nshepperd’s (unpublished) multi-scale GAN with self-attention layers, spectral normalization, and a few other tweaks:

PokeGAN, Asuka faces, 2018-11-16

Self-Attention-GAN-TensorFlow

SAGAN did not have an official implementation released at the time so I used the Junho Kim implementation; 128px SAGAN, WGAN-LP loss, on Asuka faces & whole Asuka images:

Self-Attention-GAN-TensorFlow, whole Asuka, 2019-08-18

Self-Attention-GAN-TensorFlow, Asuka faces, 2019-09-13

VGAN

The official VGAN code for et al 2018 had not been released when I began trying VGAN, so I used akanimax’s implementation.

The variational discriminator bottleneck, along with self-attention layers and progressive growing, is one of the few strategies which permit 512px images, and I was intrigued to see that it worked relatively well, although I ran into persistent issues with instability & mode collapse. I suspect that VGAN could’ve worked better than it did with some more work.

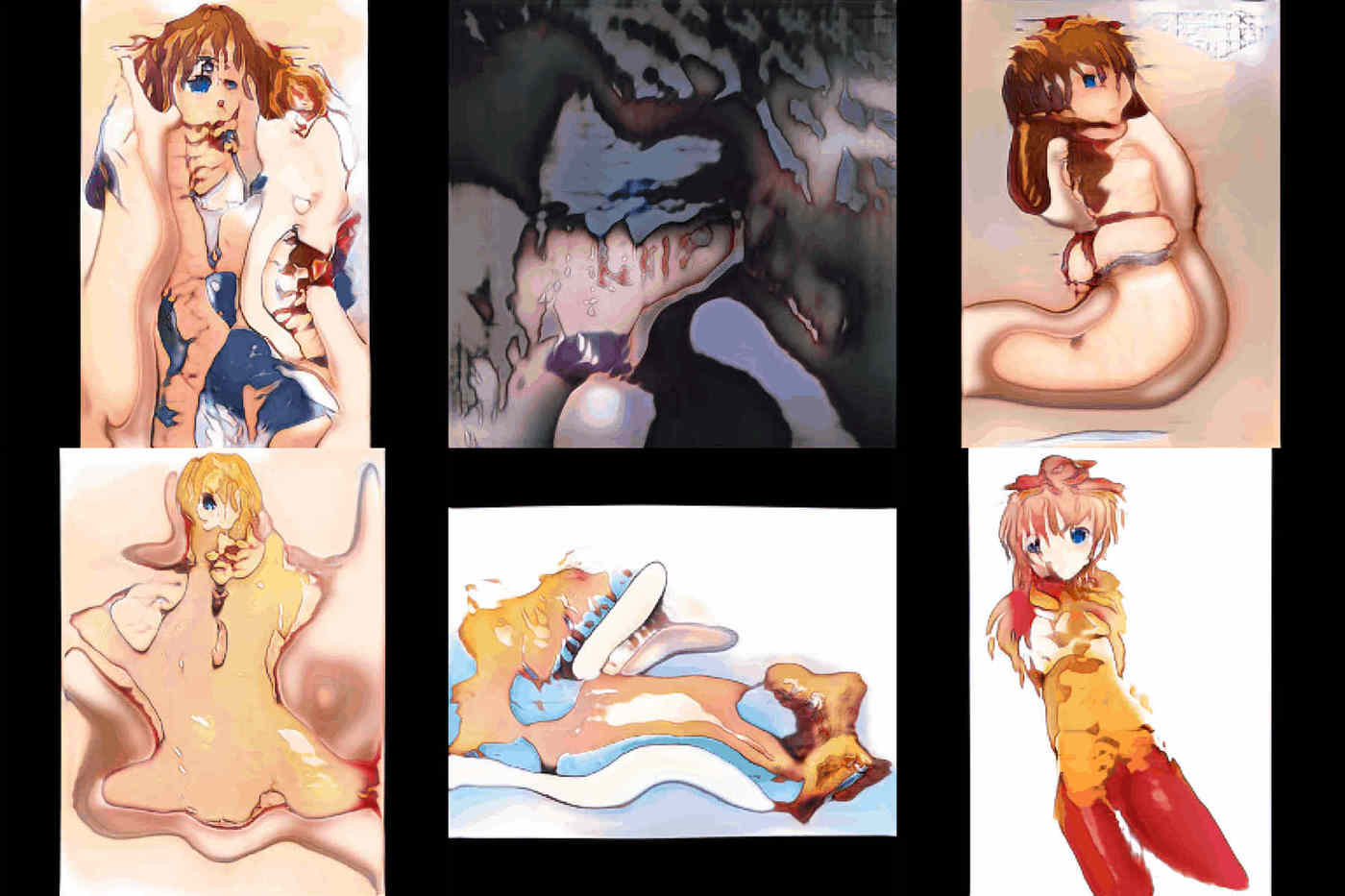

akanimax VGAN, anime faces, 2018-12-25

BigGAN Unofficial

et al 2018^s official implementation & models were not released until late March 2019 (nor the semi-official compare_gan implementation until February 2019), and I experimented with 2 unofficial implementations in late 2018–early 2019.

BigGAN-TensorFlow

Junho Kim implementation; 128px spectral norm hinge loss, anime faces:

Kim BigGAN-PyTorch, anime faces, 2019-01-17

This one never worked well at all, and I am still puzzled what went wrong.

BigGAN-PyTorch

Aaron Leong’s PyTorch BigGAN implementation (not the official BigGAN implementation). As it’s class-conditional, I faked having 1000 classes by constructing a variant anime face dataset: taking the top 1000 characters by tag count in the Danbooru2017 metadata, I then filtered for those character tags 1 by 1, and copied them & cropped faces into matching subdirectories 1–1000. This let me try out both faces & whole images. I also attempted to hack in gradient accumulation for big minibatches to make it a true BigGAN implementation, but didn’t help too much; the problem here might simply have been that I couldn’t run it long enough.

Results upon abandoning:

Leong BigGAN-PyTorch, 1000-class anime character dataset, 2018-11-30 (#314,000)

Leong BigGAN-PyTorch, 1000-class anime face dataset, 2018-12-24 (#1,006,320)

GAN-QP

Implementation of 2018:

GAN-QP, 512px Asuka faces, 2018-11-21

Training oscillated enormously, with all the samples closely linked and changing simultaneously. This was despite the checkpoint model being enormous (551MB) and I am suspicious that something was seriously wrong—either the model architecture was wrong (too many layers or filters?) or the learning rate was many orders of magnitude too large. Because of the small minibatch, progress was difficult to make in a reasonable amount of wallclock time, so I moved on.

WGAN

WGAN-GP official implementation; I did most of the early anime face work with WGAN on a different machine and didn’t keep copies. However, a sample from a short run gives an idea of what WGAN tended to look like on anime runs:

WGAN, 256px Asuka faces, iteration 2100

Normalizing Flow

Glow

Used Glow (2018) official implementation.

Due to the enormous model size (4.2GB), I had to modify Glow’s settings to get training working reasonably well, after extensive tinkering to figure out what any meant:

{"verbose": true, "restore_path": "logs/model_4.ckpt", "inference": false, "logdir": "./logs", "problem": "asuka",

"category": "", "data_dir": "../glow/data/asuka/", "dal": 2, "fmap": 1, "pmap": 16, "n_train": 20000, "n_test": 1000,

"n_batch_train": 16, "n_batch_test": 50, "n_batch_init": 16, "optimizer": "adamax", "lr": 0.0005, "beta1": 0.9,

"polyak_epochs": 1, "weight_decay": 1.0, "epochs": 1000000, "epochs_warmup": 10, "epochs_full_valid": 3,

"gradient_checkpointing": 1, "image_size": 512, "anchor_size": 128, "width": 512, "depth": 13, "weight_y": 0.0,

"n_bits_x": 8, "n_levels": 7, "n_sample": 16, "epochs_full_sample": 5, "learntop": false, "ycond": false, "seed": 0,

"flow_permutation": 2, "flow_coupling": 1, "n_y": 1, "rnd_crop": false, "local_batch_train": 1, "local_batch_test": 1,

"local_batch_init": 1, "direct_iterator": true, "train_its": 1250, "test_its": 63, "full_test_its": 1000, "n_bins": 256.0, "top_shape": [4, 4, 768]}

...

{"epoch": 5, "n_processed": 100000, "n_images": 6250, "train_time": 14496, "loss": "2.0090", "bits_x": "2.0090", "bits_y": "0.0000", "pred_loss": "1.0000"}An additional challenge was numerical instability in the reversing of matrices, giving rise to many ‘invertibility’ crashes.

Final sample before I looked up the compute requirements more carefully & gave up on Glow:

Glow, Asuka faces, 5 epoches (2018-08-02)

VAE

IntroVAE

A hybrid GAN-VAE architecture introduced in mid-2018 by “IntroVAE: Introspective Variational Autoencoders for Photographic Image Synthesis”, et al 2018, with the official PyTorch implementation released in April 2019, IntroVAE attempts to reuse the encoder-decoder for an adversarial loss as well, to combine the best of both worlds: the principled stable training & reversible encoder of the VAE with the sharpness & high quality of a GAN.

Quality-wise, they show IntroVAE works on CelebA & LSUN BEDROOM at up to 1024px resolution with results they claim are comparable to ProGAN. Performance-wise, for 512px, they give a runtime of 7 days with a minibatch n = 12, or presumably 4 GPUs (since their 1024px run script implies they used 4 GPUs and I can fit a minibatch of n = 4 onto 1×1080ti, so 4 GPUs would be consistent with n = 12), and so 28 GPU-days.

I adapted the 256px suggested settings for my 512px anime portraits dataset:

python main.py --hdim=512 --output_height=512 --channels='32, 64, 128, 256, 512, 512, 512' --m_plus=120 \

--weight_rec=0.05 --weight_kl=1.0 --weight_neg=0.5 --num_vae=0 \

--dataroot=/media/gwern/Data2/danbooru2018/portrait/1/ --trainsize=302652 --test_iter=1000 --save_iter=1 \

--start_epoch=0 --batchSize=4 --nrow=8 --lr_e=0.0001 --lr_g=0.0001 --cuda --nEpochs=500

# ...====> Cur_iter: [187060]: Epoch [3] (5,467/60,531): time: 142675: Rec: 19569, Kl_E: 162, 151, 121, Kl_G: 151, 121,There was a minor bug in the codebase where it would crash on trying to print out the log data, perhaps because it assumes multi-GPU and I was running on 1 GPU, and was trying to index into an array which was actually a simple scalar, which I fixed by removing the indexing:

- info += 'Rec: {:.4f}, '.format(loss_rec.data[0])

- info += 'Kl_E: {:.4f}, {:.4f}, {:.4f}, '.format(lossE_real_kl.data[0],

- lossE_rec_kl.data[0], lossE_fake_kl.data[0])

- info += 'Kl_G: {:.4f}, {:.4f}, '.format(lossG_rec_kl.data[0], lossG_fake_kl.data[0])

-

+

+ info += 'Rec: {:.4f}, '.format(loss_rec.data)

+ info += 'Kl_E: {:.4f}, {:.4f}, {:.4f}, '.format(lossE_real_kl.data,

+ lossE_rec_kl.data, lossE_fake_kl.data)

+ info += 'Kl_G: {:.4f}, {:.4f}, '.format(lossG_rec_kl.data, lossG_fake_kl.data)Sample results after ~1.7 GPU-days:

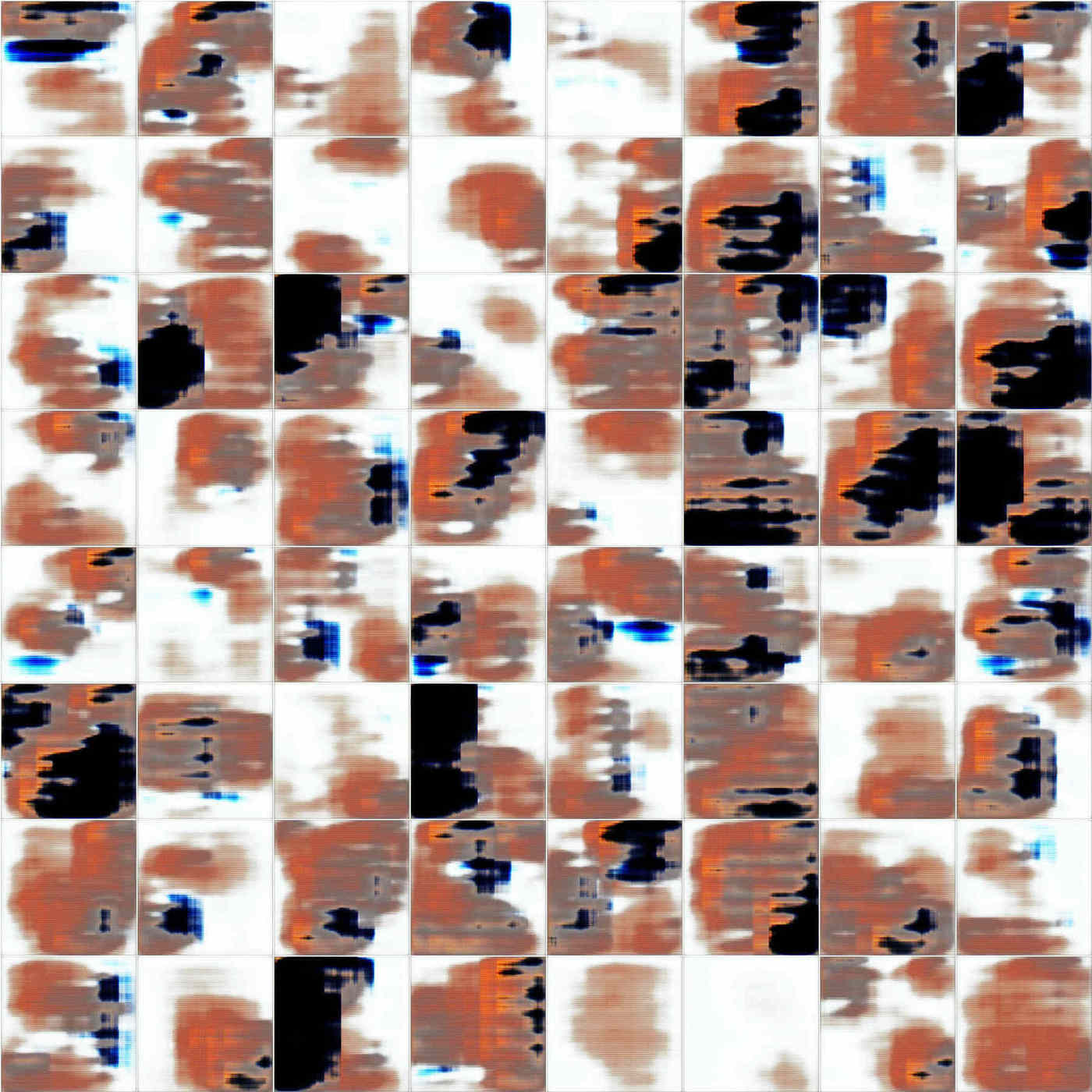

IntroVAE, 512px anime portrait (n = 4, 3 sets: real datapoints, encoded → decoded versions of the real datapoints, and random generated samples)

By this point, StyleGAN would have been generating recognizable faces from scratch, while the IntroVAE random samples are not even face-like, and the IntroVAE training curve was not improving at a notable rate. IntroVAE has some hyperparameters which could probably be tuned better for the anime portrait faces (they briefly discuss the use of the --num_vae option to run in classic VAE mode to let you tune the VAE-related hyperparameters before enabling the GAN-like part), but it should be fairly insensitive overall to hyperparameters and unlikely to help all that much. So IntroVAE probably can’t replace StyleGAN (yet?) for general-purpose image synthesis. This demonstrates again that it seems like everything works on CelebA these days and just because something works on a photographic dataset does not mean it’ll work on other datasets. Image generation papers should probably branch out some more and consider non-photographic tests.